AI Model Monitoring, Explainability, and Continual Learning in Production

The December 12, 2024, ChatGPT outage, which impacted over 19,000 users for 30 minutes, highlights that even top technology companies face infrastructure challenges. While outages can’t be fully avoided, strong monitoring systems are key to detecting and resolving them quickly. These systems help teams spot issues across AI infrastructure, systems, and models before they escalate.

After a model goes live, there are three vital practices to keep on top of: model monitoring, explainability, and continual learning. These practices are essential for maintaining the model’s performance, accuracy, and relevance over time. By consistently applying them, we ensure that the model remains effective and up-to-date with the latest data and trends.

In this blog post, we will explore some practices, tools, and strategies to effectively monitor AI models, interpret model predictions, and facilitate ongoing learning and improvement.

Monitoring model performance

Model performance metrics provide insights into how well a model predicts outcomes, its precision in identifying specific categories, and its overall reliability. By continuously tracking these metrics, organizations can take proactive measures to retrain or update models as needed, maintain high levels of model quality, and ensure that the deployed models remain aligned with business objectives and ethical standards.

Some of the most commonly monitored model prediction metrics are:

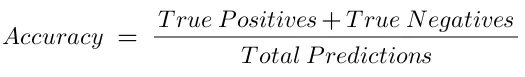

- Accuracy: The overall correctness of the model’s predictions, typically measured as the ratio of correct predictions to the total number of predictions. If an email spam detection system correctly classifies 950 emails out of 1,000 as either spam or not spam during evaluation, its accuracy would be 95%.

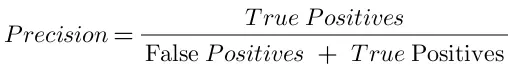

- Precision: The fraction of positive predictions that are truly positive (true positives divided by true positives plus false positives). For example, in the email spam detection system, if the model flags 100 emails as spam and 20 of them are mistakenly classified as spam (false positives), the precision would be 80%. This means that 80 out of the 100 flagged emails are actual spam.

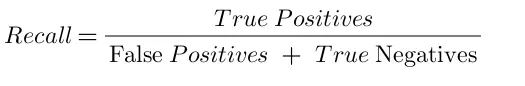

- Recall (sensitivity): The fraction of actual positives that are correctly identified by the model (true positives divided by true positives plus false negatives). For example, in an email spam detection system, if there are 120 spam emails in total and the model correctly identifies 100 of them as spam, the recall would be approximately 83.3%.

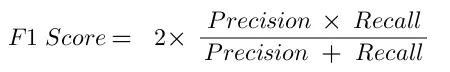

- F1 Score: The F1 Score is a performance metric for binary classification tasks that combines precision and recall into a single value (their harmonic mean), ranging from 0 to 1. A higher F1 Score indicates better performance. For instance, if the spam detection system has a precision of 80% and a recall of 83.3%, its F1 Score would be around 81.6%, indicating balanced performance in identifying spam emails.

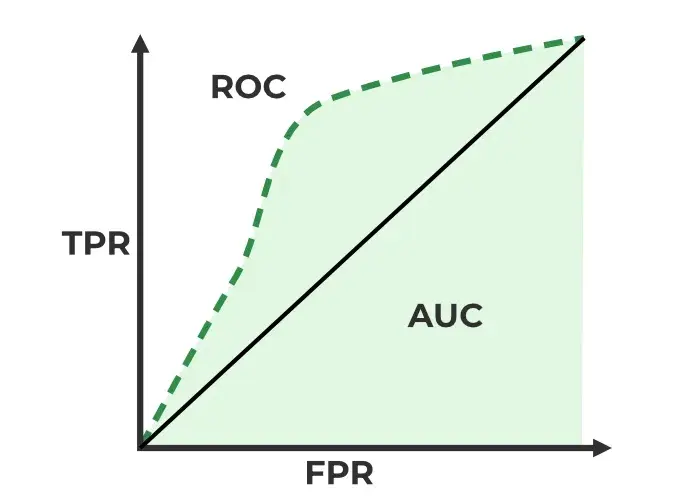

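- Area Under the ROC Curve (AUC-ROC): A measure of the model’s ability to distinguish between positive and negative classes, computed as the area under the curve that plots the true positive rate against the false positive rate across classification thresholds. A value of 1.0 indicates perfect separation, while 0.5 is no better than random guessing.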

- Log Loss (Cross-Entropy Loss): A measure of the uncertainty or confidence of the model’s predictions, commonly used for classification tasks. It measures the difference between the predicted probabilities and the actual outcomes, with lower log loss values indicating better model performance. For example, if the spam detection system predicts that an email has a 90% chance of being spam but the email is actually not spam, the log loss for that prediction is high, because the model was confidently wrong.

- Mean Squared Error (MSE) or Root Mean Squared Error (RMSE): Measures of the average squared difference (MSE), or its square root (RMSE), between the predicted and actual values, commonly used for regression tasks. For example, if a model predicts a spam probability of 0.7 for an email whose actual label is 1.0 (spam), the error is 0.3 and the squared error is 0.09. An MSE of 0.09 therefore corresponds to an RMSE of 0.3, meaning predictions deviate from the actual values by roughly 0.3.

- Confusion Matrix: A tabular representation of the model’s performance, showing the counts of true positives, true negatives, false positives, and false negatives.

- Fairness Metrics: Metrics that evaluate the fairness and bias of the model’s predictions, such as demographic parity, equal opportunity, and disparate impact.
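To make the definitions above concrete, here is a minimal sketch in plain Python that reproduces the spam-detection numbers used in the list. The function names are our own, and each bullet uses its own illustrative counts, so the precision and recall examples do not share a confusion matrix. In practice you would likely rely on a metrics library rather than hand-rolled helpers.

```python
import math

def precision(tp, fp):
    """Fraction of positive predictions that are truly positive."""
    return tp / (tp + fp)

def recall(tp, fn):
    """Fraction of actual positives that the model found."""
    return tp / (tp + fn)

def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)

def log_loss(y_true, y_prob, eps=1e-15):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)

def rmse(y_true, y_pred):
    """Square root of the mean squared difference."""
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    return math.sqrt(mse)

def auc_roc(y_true, y_score):
    """Chance that a random positive outscores a random negative (ties count half)."""
    pos = [s for y, s in zip(y_true, y_score) if y == 1]
    neg = [s for y, s in zip(y_true, y_score) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Counts taken from the spam-detection examples in the list above
print(f"accuracy  = {950 / 1000:.3f}")
p = precision(tp=80, fp=20)    # 100 flagged emails, 20 of them false positives
r = recall(tp=100, fn=20)      # 120 spam emails, 100 of them caught
print(f"precision = {p:.3f}")
print(f"recall    = {r:.3f}")
print(f"f1        = {f1_score(p, r):.3f}")

# A confident wrong prediction is penalized far more than a cautious correct one
print(f"log loss (p=0.9, not spam) = {log_loss([0], [0.9]):.3f}")
print(f"log loss (p=0.1, not spam) = {log_loss([0], [0.1]):.3f}")
print(f"rmse (p=0.7, label=1.0)    = {rmse([1.0], [0.7]):.3f}")
```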

Apart from the above metrics, other critical metrics related to model performance and data distribution need to be continuously monitored to ensure the effectiveness of the deployed model. In the rest of this section, we will focus on three major AI model monitoring categories:

- Outliers

- Drifts

- Model predictions

What are Outliers?

Outliers refer to individual data points that are significantly different from other data points in the dataset.

Outlier examples:

- In a dataset of car prices, an outlier might be a car that costs $1 million, while the rest of the cars in the dataset cost less than $100,000.

- In a dataset of student test scores, an outlier might be a student who scored 100% on a test while the rest of the students scored between 60% and 90%.

- In a dataset of social media engagement metrics, an outlier might be a post that received 100,000 likes, while the rest of the posts received less than 1,000 likes.

Types of Outliers

Univariate outliers

These are data points that are significantly different from the rest of the data in a single variable or feature. They can be identified by looking at the distribution of values for that variable and finding points that lie an abnormal distance from the mean or median.

Example: In a dataset of car weights, if most cars weigh between 2,500 lbs and 4,000 lbs, a car weighing 8,000 lbs would be considered a univariate outlier for the weight variable.
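One common way to flag univariate outliers is the interquartile range (IQR) rule. The sketch below is a minimal illustration using the car weight example; the 1.5 multiplier is a widely used convention rather than a requirement, and the sample weights are made up for the demonstration.

```python
import statistics

def iqr_outliers(values, k=1.5):
    """Return values lying more than k * IQR below Q1 or above Q3."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]

# Illustrative car weights in lbs; most fall between 2,500 and 4,000
car_weights_lbs = [2500, 2800, 3000, 3200, 3500, 3700, 4000, 8000]
print(iqr_outliers(car_weights_lbs))
```

Because the rule relies on quartiles rather than the mean, a single extreme value does not distort the thresholds used to detect it.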

Multivariate outliers

These are data points that appear outlying when considering multiple features or variables together. A point may not be an outlier in any single variable, but its combination of values across multiple variables makes it an outlier in the multivariate space.

Example: In a dataset containing features like engine size, horsepower, and fuel efficiency, a car with a small engine size (e.g., 1.6L), high horsepower (e.g., 300hp), and excellent fuel efficiency (e.g., 40 mpg) would be considered a multivariate outlier, as this combination of values is highly unusual.

Contextual outliers

These are data points that are considered outliers not based on their individual values but based on the context or behavior of the data.

Example: In a dataset tracking the daily mileage driven by a fleet of cars, if one particular car typically drives around 50 miles per day, but on one day it drives 500 miles, that would be considered a contextual outlier for that specific car, even though driving 500 miles in a day may not be unusual for some other cars in the fleet.
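A simple way to approximate contextual outlier detection is to compare each value against the history of the entity it belongs to, instead of against the whole dataset. The sketch below assumes a per-car mileage history and a z-score threshold of 3; both the data and the threshold are illustrative choices.

```python
import statistics

def is_contextual_outlier(history, value, threshold=3.0):
    """Flag a value that is far from this entity's own historical behavior."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return value != mean
    return abs(value - mean) / stdev > threshold

# Hypothetical daily mileage per car
daily_miles = {
    "car_a": [48, 52, 50, 47, 53, 51, 49],         # usually around 50 miles/day
    "car_b": [480, 510, 495, 520, 505, 490, 500],  # long-haul vehicle
}

# The same 500-mile day is unusual for car_a but normal for car_b
for car, history in daily_miles.items():
    print(car, is_contextual_outlier(history, 500))
```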

What are Drifts?

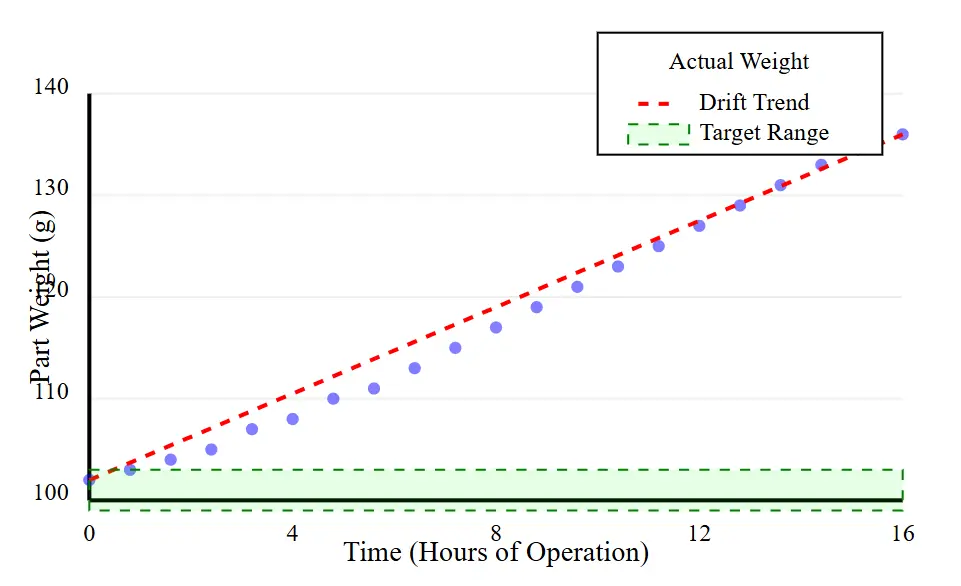

Drifts refer to changes in the data distribution over time.

For instance, if a robotic arm is assembling parts and the average weight of the parts gradually increases over time due to a change in manufacturing processes, this would be considered a drift.

To understand these concepts better, let’s consider a few more examples.

Drift examples:

- In an online sales dataset, a drift might be a gradual increase in the average order value over time due to changes in consumer behavior or pricing strategies.

- In an air quality dataset, a drift might be a gradual decrease in air quality over time due to changes in industrial activity or weather patterns.

- In a dataset of financial transaction data, a drift might be a gradual increase in the number of fraudulent transactions over time due to changes in the tactics used by fraudsters.

Types of drifts

Concept drift

This type of drift occurs when the fundamental concept or idea that the AI model is trying to learn undergoes a shift over time. The relationships between the input features and the target variable evolve, requiring the model to adapt to the new conceptual understanding.

Example: A car resale value prediction model experiences drift as the concept of “resale value” evolves to include new factors like safety ratings and fuel efficiency over time.

Real drift

Real drift happens when there is a change in the real-world phenomena or environment that the AI model is designed to operate in. This leads to a shift in the statistical properties of the input data, requiring the model to account for the new real-world conditions.

Example: In a car resale value prediction model, real drift could occur if there is a sudden surge in demand for electric vehicles due to government incentives. This change in market preferences would alter the relationship between the car type and its resale value, requiring the model to adjust to these new real-world conditions.

Virtual drift

In this case, the drift is not caused by changes in real-world conditions but rather by factors related to the data acquisition, processing, or presentation methods. Despite no fundamental shift in the underlying phenomena, how the data is captured or represented undergoes alterations.

Example: An update to a car’s camera system, altering image processing algorithms or color calibration, causes a drift in the input data distribution despite no change in the captured scenes.

Data drift

Data drift refers to a change in the statistical distribution of the input features over time while the relationship between the features and the target variable remains relatively stable. This could be caused by factors like sensor degradation, changes in data sources, or evolving patterns in the input data.

Example: A model estimating a car’s fuel efficiency experiences data drift as drivers’ habits change over time, leading to different distributions of input features like speed, acceleration, and braking patterns.
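One way to quantify data drift for a single feature is the Population Stability Index (PSI), which compares the binned distribution of a reference window (for example, training data) with a current window. PSI is our choice for this sketch; it is one of several possible drift statistics. The bin count, the epsilon floor, and the rule-of-thumb thresholds in the comments are assumptions, and the speed values are synthetic.

```python
import math

def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index between two samples of one feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the reference range
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays defined
        return [max(c / len(values), eps) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))

# Synthetic speed readings: the current window is shifted upward
reference_speed = [float(v) for v in range(100)]
current_speed = [v + 30 for v in reference_speed]

print(f"PSI vs itself:  {psi(reference_speed, reference_speed):.3f}")
print(f"PSI vs shifted: {psi(reference_speed, current_speed):.3f}")
# Common rule of thumb (not a hard rule): below 0.1 is stable,
# 0.1 to 0.25 is a moderate shift, above 0.25 is a significant shift.
```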

Model drift

This type of drift occurs when the performance of the AI model itself deteriorates over time, even though there may be no significant changes in the input data distribution or the real-world conditions. It could be caused by issues with model maintenance, deployment, or other factors affecting the model’s behavior.

Example: An AI system for detecting pedestrians around a car, which initially performed well, experiences a gradual decline in accuracy over time, even without significant changes in the input data or real-world conditions.
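Model drift is usually noticed by tracking a performance metric over a sliding window of recent predictions once ground-truth labels arrive. The class below is a minimal sketch of that idea; the class name, window size, and accuracy threshold are hypothetical and would need tuning for a real system.

```python
from collections import deque

class RollingAccuracyMonitor:
    """Tracks accuracy over the most recent predictions and flags degradation."""

    def __init__(self, window=100, min_accuracy=0.9):
        self.outcomes = deque(maxlen=window)  # old outcomes fall off automatically
        self.min_accuracy = min_accuracy

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    @property
    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def degraded(self):
        # Only alert once the window is full, to avoid noisy early alarms
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.accuracy < self.min_accuracy

monitor = RollingAccuracyMonitor(window=10, min_accuracy=0.8)
for _ in range(10):
    monitor.record("pedestrian", "pedestrian")   # model is correct
print(monitor.accuracy, monitor.degraded())

for _ in range(5):
    monitor.record("pedestrian", "no_pedestrian")  # model starts missing
print(monitor.accuracy, monitor.degraded())
```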

Tools to detect outliers and drifts

Detecting and handling outliers and drifts in AI models is crucial for maintaining accuracy. For example, if the AI model is not designed to handle outliers, it may produce inaccurate predictions when it encounters them. Similarly, if the AI model is not designed to handle drifts, it may become less accurate over time as the data distribution changes. These issues can be detected at various stages, such as data preparation, live inputs, or model predictions.

The following are some of the open source and commercial tools that enable the detection of outliers and drifts:

- Python Outlier Detection (PyOD): A comprehensive library for detecting outliers using a variety of machine learning algorithms.

- Alibi Detect: A library focused on outlier, drift, and adversarial detection to monitor machine learning models in production.

- Evidently AI: Provides visual reports and monitoring tools to detect data and model drifts, ensuring continuous model performance.

- Great Expectations: A data validation tool that helps ensure data quality by defining, testing, and documenting expectations for datasets.

What are model predictions?

Machine learning models are increasingly being integrated into various industries and applications. However, despite their growing use, these models often operate as ‘black boxes,’ meaning they don’t reveal the underlying logic behind their decisions, making it difficult to understand or explain their outputs. This opacity is concerning for several reasons. Firstly, understanding why a model makes a certain prediction is fundamental to building trust, which is crucial for relying on the model’s outputs in decision-making processes. Additionally, a comprehensive grasp of how a model functions and the variables that affect its predictions is essential when evaluating whether to implement a new model.

Moreover, clarity about how machine learning models operate isn’t just about trust; it also offers valuable insights that can enhance model performance. By analyzing the elements that lead to unreliable predictions, we can pinpoint areas needing improvement and take action to make models more reliable. So, let’s understand the different types of explanations, algorithms, methods & tools that help in describing how a model prediction works.

Types of model explanations

Model explanations can be broadly classified into two major categories, i.e., local and global.

Global explanations

Global explanations provide an understanding of how a model behaves generally, offering insights into the overall behavior of the model and the importance of different features in making predictions across all instances. They focus on explaining the model’s decisions based on a holistic view of its features and learned components, such as weights and structures. Global explanations are beneficial for gaining a high-level understanding of the model’s behavior and feature interactions, helping to comprehend the distribution of the target outcome based on the input features. For example, in the spam detection system, a global explanation might reveal that features like the presence of keywords (“win,” “free”) and the frequency of links are significant indicators of spam across all emails. This helps in understanding overall model behavior and improving spam detection strategies.

Local explanations

Local explanations focus on explaining a single model output or prediction, providing insights into why a specific prediction was made for an individual instance. They offer more detailed and specific insights by highlighting the contribution of features to a particular prediction, allowing for a deeper understanding of the model’s decision-making process at an individual level. Local explanations can be more accurate than global explanations in certain contexts, as they provide tailored insights for specific instances, making them indispensable for understanding the root causes of particular predictions in production scenarios. Local explanations for a specific email classified as spam might highlight that it contains the word “lottery” multiple times and links to suspicious domains. This provides clarity on why that particular email was flagged, aiding in the verification of the classification.
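To illustrate the difference between the two, consider a simple linear spam scorer, where each feature's contribution to a score is just its weight multiplied by its value. The sketch below assumes such a model; the feature names and weights are hypothetical and not learned from real data. For non-linear models, the same idea requires a dedicated explanation algorithm.

```python
# Hypothetical weights of a linear spam scorer (illustrative only)
WEIGHTS = {"count_win": 1.2, "count_free": 0.9, "num_links": 0.6, "known_sender": -2.0}

def local_explanation(features):
    """Per-feature contribution (weight * value) to one email's score, largest first."""
    contributions = {name: WEIGHTS[name] * features.get(name, 0.0) for name in WEIGHTS}
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)

def global_explanation(dataset):
    """Mean absolute contribution of each feature across all emails."""
    totals = {name: 0.0 for name in WEIGHTS}
    for features in dataset:
        for name, value in local_explanation(features):
            totals[name] += abs(value)
    return {name: total / len(dataset) for name, total in totals.items()}

emails = [
    {"count_win": 3, "count_free": 2, "num_links": 5, "known_sender": 0},
    {"count_win": 0, "count_free": 1, "num_links": 1, "known_sender": 1},
    {"count_win": 0, "count_free": 0, "num_links": 0, "known_sender": 1},
]

# Local: why was this one email scored the way it was?
print(local_explanation(emails[0]))
# Global: which features matter most on average across all emails?
print(global_explanation(emails))
```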

Explanation algorithms

There are multiple algorithms for explaining machine learning models because there is no one-size-fits-all solution for understanding the decision-making process of these models. Different algorithms have different strengths and weaknesses and may be better suited for certain types of models, data, or use cases. For example, some algorithms may be more effective for explaining linear models, while others may be better for explaining complex neural networks. The choice of algorithm depends on the specific needs and goals of the user, as well as the complexity and transparency of the model being explained.

Some of the most popular model explanation algorithms are:

- SHAP (SHapley Additive exPlanations): Distributes feature importance based on Shapley values from cooperative game theory.

- LIME (Local Interpretable Model-Agnostic Explanations): Explains individual predictions with locally approximated models.

- Anchors: Uses rule-based conditions to explain predictions with high precision.

- Accumulated Local Effects: Measures average feature impact while accounting for feature correlations.

Model explanation methods & tools

Explanation methods can be broadly classified into two categories, i.e., white-box and black-box.

What is the white box approach?

The white box approach involves understanding the internal workings of a system, including its algorithms, data structures, and implementation details. In the context of AI models, white box explanations provide insights into the model’s architecture, weights, and decision-making processes.

What is the black box approach?

The black box approach focuses on the system’s inputs and outputs without considering its internal mechanisms. In AI models, black box explanations involve analyzing the model’s predictions, errors, and overall behavior without delving into the underlying algorithms and weights.
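A well-known black-box technique is permutation importance: shuffle one feature at a time and measure how much the model's accuracy drops, using nothing but its inputs and outputs. This technique is not covered in the list of algorithms above; we use it here only because it is short enough to sketch in plain Python. The model and data below are hypothetical.

```python
import random

def accuracy(model, X, y):
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)

def permutation_importance(model, X, y, n_repeats=5, seed=0):
    """Average accuracy drop when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild the dataset with only column j shuffled
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical black box: we only call it, we never inspect its internals.
# It happens to depend on the first feature only.
def black_box(row):
    return 1 if row[0] > 0.5 else 0

X = [[i / 100, (i * 37 % 100) / 100] for i in range(100)]
y = [black_box(row) for row in X]

print(permutation_importance(black_box, X, y))
```

A feature the model ignores shows an importance of zero, while shuffling a feature the model relies on produces a clear accuracy drop.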

Enabling explanation for your model deployments

To enable explanations in your model deployments, first decide on the explanation method you will use, i.e., white box or black box, and then, based on your use case, select the algorithm best suited to your needs. Now, when it comes to tools, there are several open-source libraries that help enable model explanations:

- Alibi Explain: Alibi is a Python library for machine learning model inspection and interpretation. Alibi caters to both black-box and white-box model inspection, as well as local and global explanation techniques for classification and regression models. If you are using Seldon for your model deployment, integration becomes quite straightforward.

- DALEX: The DALEX package x-rays any model and helps explore and explain its behavior, helping to understand how complex models work.

- ELI5: ELI5 is a Python package designed to simplify the debugging of machine learning classifiers and make their predictions easy and intuitive to understand. It lacks true model-agnostic capabilities, and its support is largely confined to tree-based and other parametric/linear models.

What is continual learning?

Continual learning is a technique that allows AI models to continuously learn from new data as it becomes available rather than being trained once on a fixed dataset. This approach is particularly important in real-world applications where data is constantly evolving, such as fraud detection models that need to adapt to new fraud patterns quickly. Continual learning differs from traditional learning methods, which involve training models on the latest available data and discarding them when their performance declines. Instead, continual learning enables models to learn from new data while retaining the knowledge they have already acquired without extensive retraining. This allows models to stay up-to-date with changing data distributions and maintain high accuracy over time. Unlike reinforcement learning, which learns through trial-and-error rewards, continual learning incrementally updates models from evolving data streams. This complements continuous model monitoring: when monitoring detects a data shift, the model can adapt in near real time without a full retrain, which helps keep accuracy consistent over time.

A significant challenge in today’s machine learning production is the ongoing issue of the cold start problem, which continual learning can help address. The cold start problem emerges when a model must make predictions for a new user without any prior historical data. For instance, in a movie recommendation system, the system typically requires knowledge of a user’s watch history to recommend movies they might enjoy. However, if the user is new and lacks a watch history, the system must provide generic recommendations, such as the most popular movies on the site. Continual learning can help overcome this challenge by enabling models to learn from new users and adapt to their preferences over time without requiring extensive historical data.

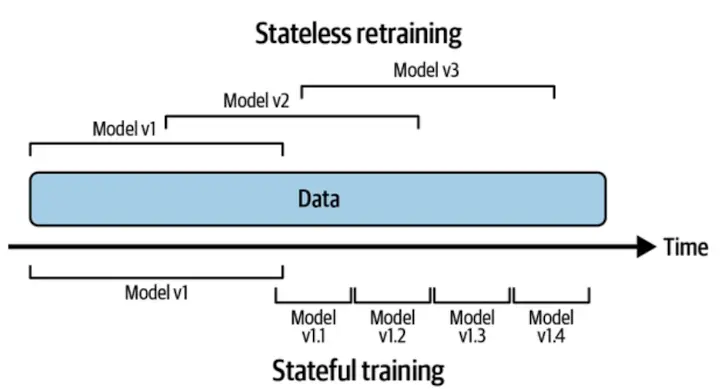

Difference between stateless retraining and stateful training

Stateless retraining refers to periodically retraining an AI model from scratch using the latest available data without considering the previous state or weights of the model. This approach can be beneficial when dealing with significant data or concept drifts, as it allows the model to learn the new patterns or distributions without being influenced by the previous training.

Stateful training involves incrementally updating an existing model with new data while retaining and building upon the previous model’s weights and knowledge. This approach can be more efficient and faster, particularly when the data or concept changes are relatively small or gradual. However, it also carries the risk of compounding errors or biases over time if the model is not properly updated or monitored.
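The difference comes down to where training starts. The sketch below uses a tiny one-feature linear model trained with plain SGD as a stand-in for a real model; the data, learning rate, and epoch counts are illustrative assumptions. The stateless path starts from zero weights, while the stateful path starts from the deployed model's weights and runs a shorter update.

```python
def sgd_fit(data, weights=(0.0, 0.0), lr=0.05, epochs=200):
    """Fit y ~ w * x + b with plain SGD, starting from the given weights."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            error = (w * x + b) - y
            w -= lr * error * x
            b -= lr * error
    return w, b

# Synthetic data: the underlying relationship shifts slightly over time
old_data = [(x, 2.0 * x + 1.0) for x in range(5)]
new_data = [(x, 2.2 * x + 1.0) for x in range(5)]

deployed = sgd_fit(old_data)

# Stateless retraining: ignore the deployed weights and start from scratch
stateless = sgd_fit(new_data)

# Stateful training: warm-start from the deployed weights with a short update
stateful = sgd_fit(new_data, weights=deployed, epochs=20)

print("deployed: ", deployed)
print("stateless:", stateless)
print("stateful: ", stateful)
```

When the shift is small, the warm-started model begins close to the new optimum, which is why stateful training typically needs less data and compute per update.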

How to implement continual learning?

Continual learning revolves around establishing a robust infrastructure that enables you to promptly update your models and swiftly deploy these modifications whenever necessary. Businesses that implement continual learning in their production processes update their models in small, incremental batches. For example, they might refresh the current model after every 512 or 1,024 data points, with the optimal number of data points in each batch depending on the specific task. Before deploying the updated model, it should undergo evaluation to ensure its superiority. This means that changes should not be made directly to the existing model. Instead, a copy of the current model should be created and updated with new data, and only if the updated copy outperforms the current model should it replace the existing one. The existing model is often referred to as the champion model, while the updated copy is called the challenger.
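The champion and challenger flow described above can be sketched as follows. The toy `MeanModel`, the error metric, and the `min_gain` margin are all hypothetical placeholders; the point is only that the update is applied to a copy, and the copy replaces the champion only if it scores better on held-out data.

```python
import copy

class MeanModel:
    """Toy model: predicts the running mean of the targets it has seen."""

    def __init__(self):
        self.total, self.count = 0.0, 0

    def update(self, targets):
        self.total += sum(targets)
        self.count += len(targets)

    def predict(self):
        return self.total / self.count if self.count else 0.0

def mean_abs_error(model, holdout):
    return sum(abs(model.predict() - y) for y in holdout) / len(holdout)

def update_with_challenger(champion, new_batch, holdout, min_gain=0.0):
    """Train a copy on the new batch; promote it only if it beats the champion."""
    challenger = copy.deepcopy(champion)  # never modify the live model directly
    challenger.update(new_batch)
    if mean_abs_error(challenger, holdout) + min_gain < mean_abs_error(champion, holdout):
        return challenger, True
    return champion, False

champion = MeanModel()
champion.update([10, 10, 10])

# A useful batch: the challenger moves closer to the holdout targets
champion, promoted = update_with_challenger(champion, [20, 20, 20], holdout=[19, 20, 21])
print(promoted, champion.predict())

# A corrupted batch: the challenger gets worse, so the champion stays
champion, promoted = update_with_challenger(champion, [-100, -100, -100], holdout=[19, 20, 21])
print(promoted, champion.predict())
```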

To evaluate your model in production safely, you can use release strategies that first expose each new version of the model to a limited scope and then roll it out to your entire customer base. Some of the popular release strategies include:

- Shadow Deployment

- A/B Testing

- Canary Releases
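As a rough illustration of limiting the scope of a new release, the sketch below routes a fixed percentage of users to the challenger model in a canary-style rollout. The `route` function and the user ID format are hypothetical; real deployments usually handle this in the serving layer or a traffic router rather than in application code.

```python
import hashlib

def route(user_id, canary_percent):
    """Deterministically send roughly canary_percent% of users to the challenger."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in [0, 99] per user
    return "challenger" if bucket < canary_percent else "champion"

users = [f"user-{i}" for i in range(1000)]
for percent in (0, 10, 50, 100):
    share = sum(route(u, percent) == "challenger" for u in users) / len(users)
    print(f"canary at {percent:>3}% -> {share:.1%} of users on challenger")
```

Hashing the user ID keeps each user on the same model between requests, and raising the percentage only ever adds users to the challenger group, so the rollout can be widened gradually.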

AI/ML tools for monitoring

Here are several AI and ML tools that our AI engineers use to monitor complex AI systems:

- MLflow: Manages machine learning workflows, including experiment tracking and model deployment.

- Prometheus: Monitors and stores time-series data for tracking ML metrics and performance.

- Deepchecks: Validates ML models, ensuring data quality and performance.

- Neptune.ai: Tracks and visualizes machine learning experiments and models.

- Datadog: Monitors cloud environments and ML workloads for performance and health.

- Dynatrace: Provides observability for applications and ML systems, detecting performance issues.

- Grafana and Prometheus: These are used together to monitor and visualize ML system metrics.

- Last9: Cloud native monitoring with metrics, events, logs, and traces.

Final words

The machine learning lifecycle doesn’t stop once a model is deployed. Keeping an eye on deployed models is essential to ensure the ongoing delivery of high-quality services powered by machine learning. If your model fails to adapt swiftly, it may not provide relevant predictions to users until it is updated again. By that point, users seeking relevant content may have already abandoned the service. In this blog post, we’ve delved into key concepts such as monitoring, explainability, and continual learning for AI/ML models that will help you measure the correctness and relevance of the model at the current time. Grasping these ideas will assist you in selecting appropriate metrics for your model to monitor and develop more contextual dashboards.

Now that you have learned about the importance of AI monitoring and its different components, you may like to implement it within your AI systems. At the production level, implementing observability could be complex, and various metrics end up showing false errors. You could also end up tracking the wrong kind of information. AI & GPU Cloud experts and observability service providers like InfraCloud can help you build your own AI cloud and add monitoring to visualize the critical information.

If you found this post valuable and informative, subscribe to our weekly newsletter for more posts like this. I’d love to hear your thoughts on this post, so do start a conversation on LinkedIn.
