EdgeX Foundry on K3s - the Inception

This blog post is part 1 of a series of articles about how to deploy and operate EdgeX Foundry, an open source software framework for the IoT Edge, on K3s, a lightweight, highly available, and secure orchestrator.

Why emphasize the Edge?

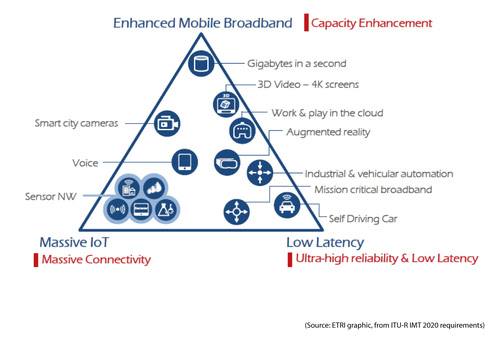

As we start using edge computing in its true sense, I think we are approaching the edge of a technological renaissance. The Edge is becoming an essential element of upcoming and futuristic technologies. Some popular examples are shown in Fig. 1:

- Edge is a complementary solution to 5G.

- Edge is the backbone for IoT and Fog.

- Edge is required for AI and ML workloads to enable real-time data processing.

- Automated vehicles leverage the Edge for mission-critical latency and high reliability.

- VR applications need the Edge to meet stringent latency, network, and reliability requirements.

- Edge will conserve broadband network capacity during the streaming of global events.

- Software upgrades can use the Edge to minimize network pressure on backhaul.

The major requirements common to all of the above areas are low latency and high network availability. Traditional cloud-based architectures struggle to meet them because they are centralized. The Edge alleviates these two pressure points by distributing data processing closer to where the data is produced, which makes it an essential part of all these technologies.
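To make the latency argument concrete, here is a back-of-the-envelope sketch of round-trip propagation delay. The distances (1,500 km to a regional cloud data center, 10 km to an edge site) are illustrative assumptions rather than measurements, and the figure of roughly 200,000 km/s for light in optical fiber ignores queuing, routing, and processing time.

```python
# Approximate signal speed in optical fiber (about two thirds of the speed of light in vacuum).
FIBER_SPEED_KM_PER_S = 200_000


def round_trip_ms(distance_km: float) -> float:
    """Return the round-trip propagation delay in milliseconds for a one-way distance."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_S * 1000


# Hypothetical distances, chosen only for illustration.
cloud_ms = round_trip_ms(1500)  # centralized cloud data center
edge_ms = round_trip_ms(10)     # nearby edge site

print(f"cloud: {cloud_ms:.2f} ms, edge: {edge_ms:.2f} ms")
# prints "cloud: 15.00 ms, edge: 0.10 ms"
```

Even before any processing happens, distance alone adds milliseconds to every request sent to a centralized data center, which is the gap the Edge is meant to close.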

Where to look while exploring the Edge?

Edge is primarily an application or use case driven strategy. Thus its implementation changes according to the use case and the underlying issues (e.g., latency, network bandwidth, scalability, security, etc.). This is also true for the adoption of 5G. Industries are formulating new tools and technologies to adopt 5G in various areas such as IoT, IIoT, entertainment, and more. This is the right stage at which to choose the implementation path and formulate the standards that will help onboard future technologies.

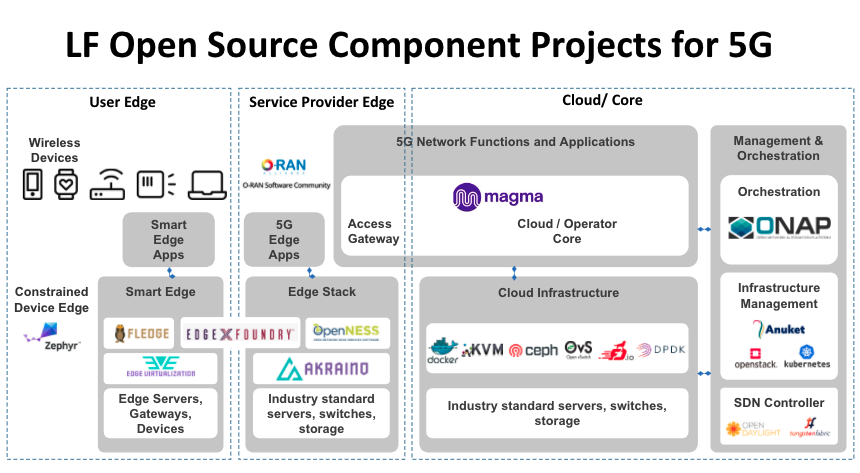

The Linux Foundation initiated two open communities, namely LF Networking and LF Edge. These communities provide an ecosystem for network infrastructure and services and an interoperable framework for Edge computing, respectively. Furthermore, LF Networking integrates with LF Edge to provide an open source Edge framework and seamless Edge networking. Of all this work, the one thing that caught my attention and prompted me to explore further was the 5G Super Blueprint initiative.

As we have learned so far, all the upcoming technologies desperately need low-latency, high-bandwidth, and scalable networks. To address these needs, LF Networking announced the 5G Super Blueprint (see Fig. 2), a community-driven integration of multiple open source initiatives that come together to show end-to-end use cases and demonstrate implementation architectures for end users.

User Edge (UE)

As you can see, the 5G Super Blueprint is mainly divided into three sections. The first section, named User Edge, is also considered the last mile network. It deals with the applications that are closest to end users. It uses on-prem and distributed compute resources to reduce latency. It also lowers the pressure on broadband networks by minimizing unnecessary backhaul communication to the data centers. In addition, it provides autonomy, increased security and privacy, and a reduction in overall cost. The business model that applications use in UE is generally based on CAPEX, as the infrastructure and its operation are handled by the user rather than delivered as a managed service.

Service Provider Edge (SPE)

In contrast to UE, SPE is a distributed yet shared space and is primarily consumed as a service. It is considered more secure and private than the cloud because it uses private networks (both wired and wireless/cellular) operated by service providers. It is more standardized than UE, but it also has unique requirements according to the use case and location.

5G Core

The core of the 5G Super Blueprint consists of tools that provide open, cloud native 5G network functions, along with cloud infrastructure that adheres to the 5G principles and is able to provision these 5G functions (e.g., network accelerators, vector packet processors, etc.). It also includes a management plane that orchestrates, automates, and manages the lifecycle of network functions.

Is Edge native similar to cloud native?

No, there is a slight difference. Edge native applications leverage cloud native principles while taking into account the unique characteristics of the Edge in areas such as resource constraints, security, latency, and autonomy. Edge native applications are developed in ways that leverage the cloud and work in concert with upstream resources. Edge applications that don’t comprehend centralized cloud compute resources, remote management, and orchestration, or that don’t leverage CI/CD, aren’t truly “edge native”; rather, they more closely resemble traditional on-premises applications.

Why EdgeX Foundry?

Covers both UE and SPE in 5G Super Blueprint

The first reason why I started my exploration of the Edge with EdgeX Foundry is that it is an overlapping project in the UE and SPE space. This means that you can use it in UE, in SPE, or in a combination of both. To understand how this is possible, let’s dive into its architecture.

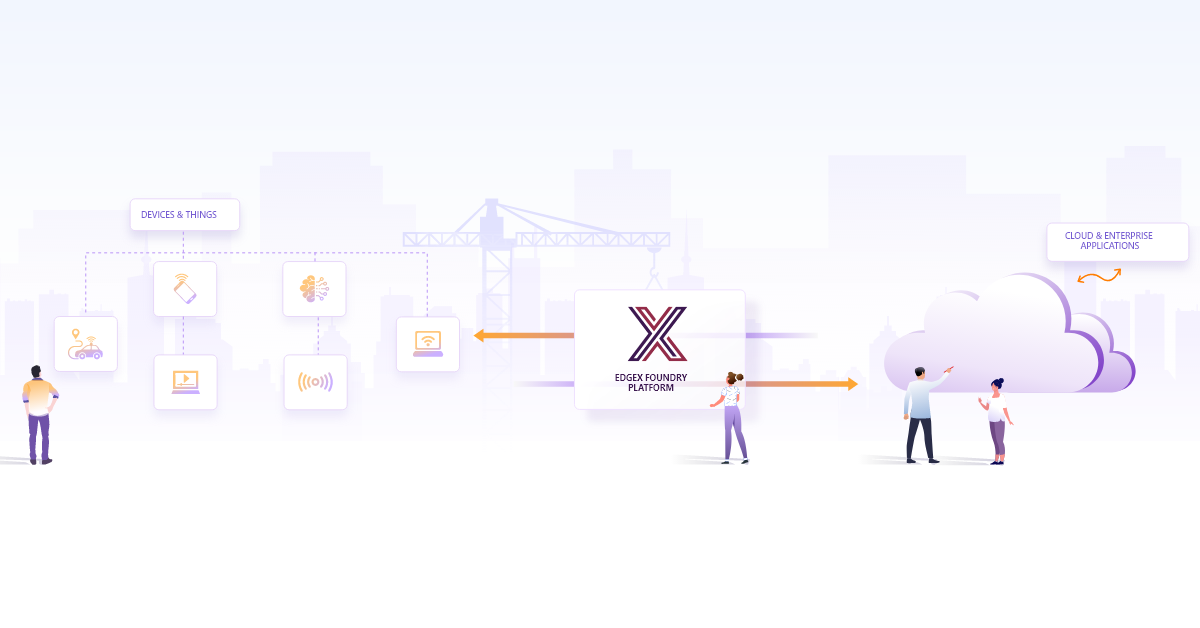

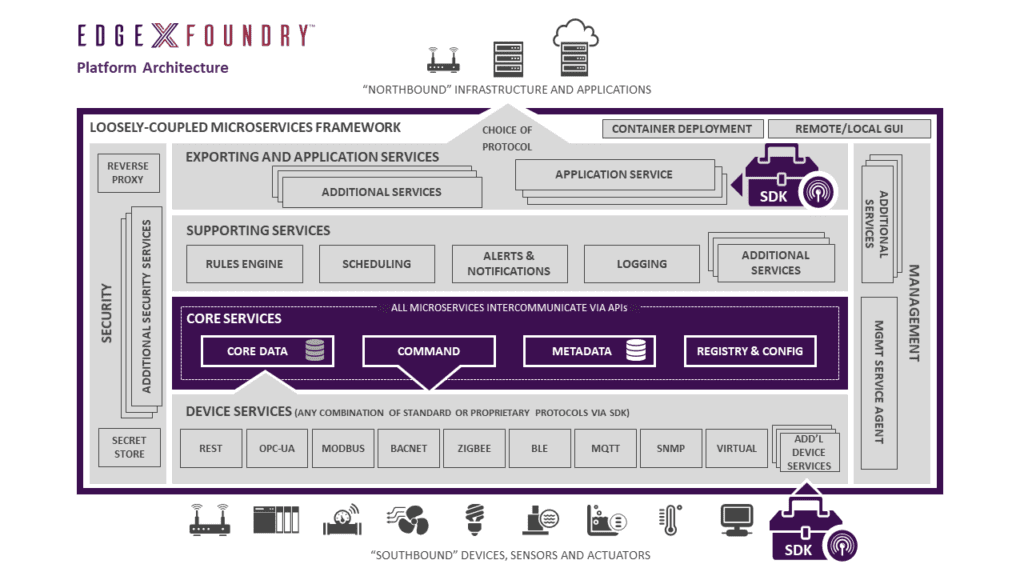

As you can see in Fig. 3, EdgeX Foundry is primarily divided into four layers, which are briefly described as follows:

- Device Services: Responsible for interacting with the Edge devices and connecting with the other services.

- Core Services: Mainly responsible for handling the device information and data processing. They consist of the following services:

  - Core data: a persistence repository and associated management service for data collected from south side objects.

  - Command: a service that facilitates and controls actuation requests from the north side to the south side.

  - Metadata: a repository and associated management service of metadata about the objects that are connected to EdgeX Foundry. Metadata provides the capability to provision new devices and pair them with their owning device services.

  - Registry and Configuration: provides other EdgeX Foundry micro services with information about associated services within EdgeX Foundry and micro services configuration properties (i.e., a repository of initialization values).

- Supporting services (Optional): The supporting services encompass a wide range of micro services, including Edge analytics (also known as local analytics). They are mainly responsible for logging, scheduling, and data clean up (also known as scrubbing in EdgeX).

  - Rules Engine: the reference implementation of the Edge analytics service that performs if-then conditional actuation at the Edge, based on sensor data collected by the EdgeX instance.

  - Scheduling: an internal EdgeX “clock” that can kick off operations in any EdgeX service.

  - Logging: provides a central logging facility for all of the EdgeX services. Services send log entries into the logging facility via a REST API, where log entries can be persisted in a database or log file.

  - Alerts and Notifications: provides EdgeX services with a central facility to send out an alert or notification.

- Application services: Application services are the means to extract, process/transform, and send sensed data from EdgeX to an endpoint or process of your choice. They also send data to many of the major cloud providers (Amazon IoT Hub, Google IoT Core, Azure IoT Hub, IBM Watson IoT…), to MQTT(s) topics, and to HTTP(s) REST endpoints.
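The flow between these layers can be sketched with a toy model: a reading arrives from the south side, is persisted, and triggers an if-then rule that issues an actuation request. This is only an illustration of the roles described above and not the EdgeX Foundry API; every class, function, and device name here (`Reading`, `CoreData`, `Command`, `make_rule`, `sensor-01`, `fan-01`) is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Reading:
    """A sensed value, as a device service might hand it over (hypothetical shape)."""
    device: str
    resource: str
    value: float


@dataclass
class Command:
    """Stands in for the command service: records actuation requests."""
    issued: List[str] = field(default_factory=list)

    def actuate(self, device: str, action: str) -> None:
        self.issued.append(f"{device}:{action}")


@dataclass
class CoreData:
    """Stands in for core data: persists readings and notifies subscribers."""
    readings: List[Reading] = field(default_factory=list)
    subscribers: List[Callable[[Reading], None]] = field(default_factory=list)

    def add(self, reading: Reading) -> None:
        self.readings.append(reading)
        for notify in self.subscribers:
            notify(reading)


def make_rule(command: Command, threshold: float) -> Callable[[Reading], None]:
    """Build an if-then rule: if the temperature exceeds the threshold, turn a fan on."""
    def rule(reading: Reading) -> None:
        if reading.resource == "temperature" and reading.value > threshold:
            command.actuate("fan-01", "on")
    return rule


core_data = CoreData()
command = Command()
core_data.subscribers.append(make_rule(command, threshold=30.0))

# Two readings from a hypothetical sensor; only the second crosses the threshold.
core_data.add(Reading("sensor-01", "temperature", 25.0))
core_data.add(Reading("sensor-01", "temperature", 32.5))

print(command.issued)  # prints "['fan-01:on']"
```

In a real deployment each of these roles is a separate micro service communicating over the network, which is what allows them to be placed on different tiers.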

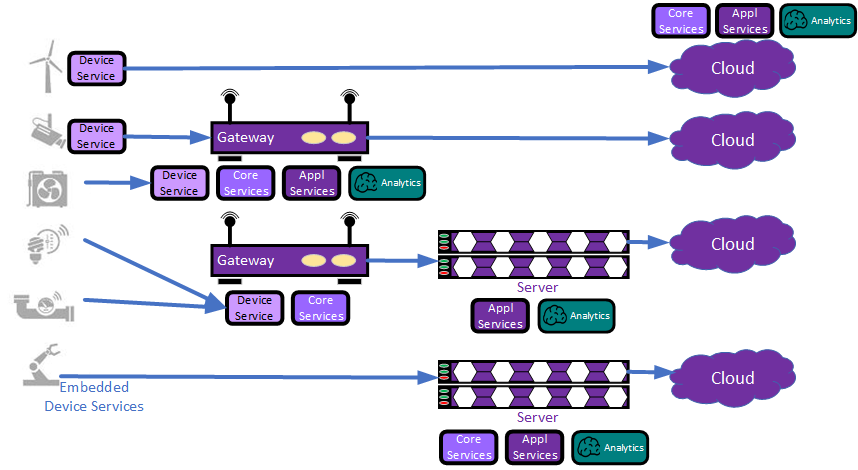

The placement of these services determines whether an EdgeX Foundry implementation runs on UE, on SPE, or on both. Fig. 4 describes this placement in detail.

The loosely coupled architecture and the microservices design enable the deployment of its services in various combinations.
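As a rough illustration of how service placement determines the kind of deployment, the sketch below maps each layer to a tier and classifies the result. The placement shown is a made-up example and not a recommendation from the EdgeX Foundry project; `Tier`, `placement`, and `classify` are hypothetical names.

```python
from enum import Enum
from typing import Dict


class Tier(Enum):
    USER_EDGE = "UE"
    SERVICE_PROVIDER_EDGE = "SPE"


# Hypothetical placement: data collection stays on-prem, the rest runs at the provider.
placement: Dict[str, Tier] = {
    "device-services": Tier.USER_EDGE,
    "core-services": Tier.USER_EDGE,
    "supporting-services": Tier.SERVICE_PROVIDER_EDGE,
    "application-services": Tier.SERVICE_PROVIDER_EDGE,
}


def classify(layout: Dict[str, Tier]) -> str:
    """Describe a deployment by the set of tiers its services occupy."""
    if not layout:
        raise ValueError("placement must contain at least one service")
    tiers = set(layout.values())
    if tiers == {Tier.USER_EDGE}:
        return "UE"
    if tiers == {Tier.SERVICE_PROVIDER_EDGE}:
        return "SPE"
    return "UE + SPE"


print(classify(placement))  # prints "UE + SPE"
```

Moving every entry to a single tier yields a pure UE or pure SPE deployment without changing the services themselves.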

Graduated to Impact Stage in LF Edge projects

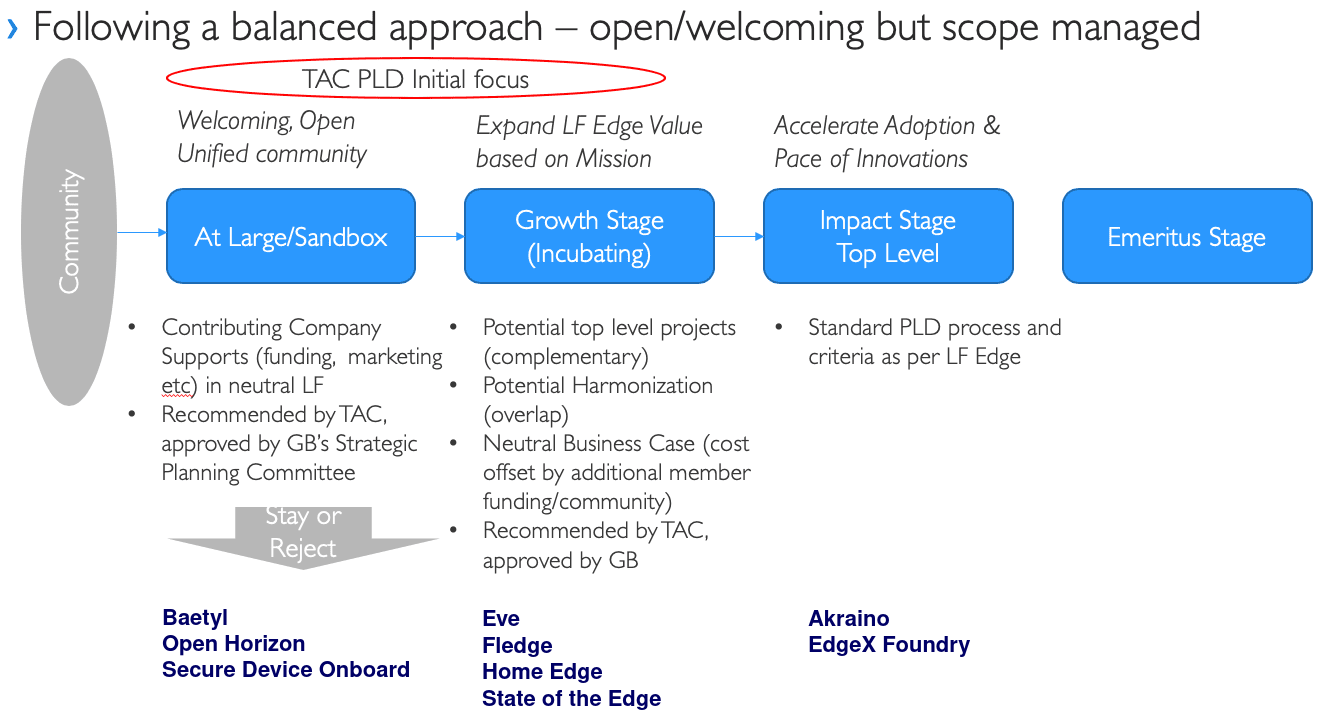

The second reason for starting with EdgeX Foundry is that it is currently in Stage 3 of LF Edge’s Project Lifecycle Document (PLD) process. The PLD defines three stages:

- Stage 1, “At Large”, is where all new projects enter. These are projects which the Technical Advisory Council (TAC) believes are, or have the potential to be, important to the ecosystem of Top-Level Projects, or the Edge ecosystem as a whole.

- Stage 2, the “Growth Stage”, is for projects that are interested in reaching the Impact Stage and have identified a growth plan for doing so.

- Stage 3, the “Impact Stage”, is for projects that have reached their growth goals and are now on a self-sustaining cycle of development, maintenance, and long-term support.

Why K3s?

When working at the Edge, you need a lightweight, resource-efficient, and highly available orchestrator to manage Edge native applications. There are many options available, such as minikube, kind, Kubernetes, K3s, and MicroK8s. Although minikube and kind are popular tools for hands-on or demo purposes, they are not intended for production. Kubernetes is a good option for orchestrating Edge native microservices, and K3s and MicroK8s are lightweight variants of Kubernetes that are more suitable for Edge scenarios. Both of them can be deployed on small devices like the Raspberry Pi and also on AWS instances. K3s does not depend on a particular Linux distribution and supports a multi-node architecture. For these reasons, this series focuses on K3s for deploying EdgeX Foundry.

Conclusion

In this post, we have seen:

- Why the Edge is necessary for upcoming technologies.

- How the Linux Foundation is contributing to open source Edge and networking.

- What EdgeX Foundry is.

- How K3s complements the Edge.

I hope you found this post informative and engaging. Stay tuned for part 2 of this series, where we explore how to deploy EdgeX Foundry on K3s and how to send sensor data from a Raspberry Pi to EdgeX Foundry.

For more posts like this one, do subscribe to our weekly newsletter. I’d love to hear your thoughts on this post, so do start a conversation on Twitter or LinkedIn :).
