How do you Integrate Emissary Ingress with OPA

API gateways play a vital role in exposing microservices. They add a network hop that every incoming request must pass through before it reaches the services. After receiving a request from a client, an API gateway handles routing, composition, protocol translation, and policy enforcement, and then reverse-proxies the request to the appropriate upstream API. Because gateways already sit in this position, they can also be configured to forward each incoming client request to an external third-party authorization (authz) server. The fate of the request then depends on the response this external authz server returns to the gateway. This is exactly where Open Policy Agent (OPA) comes into the picture.

There are many open source, Kubernetes-native API gateways, such as Contour, Kong Gateway, Traefik, and Gloo. In this article, we will explore Emissary Ingress.

Let’s dive in and learn a bit more about Emissary Ingress.

What’s Emissary Ingress?

Emissary Ingress, formerly known as Ambassador API Gateway, is an open source, Kubernetes-native API gateway and is currently a CNCF incubating project. Like many other Kubernetes gateways, Emissary is built on Envoy Proxy. It has a fully stateless architecture and supports multiple plugins, such as traditional SSO authentication protocols (e.g., OAuth, OpenID Connect), rate limiting, logging, and tracing services. Emissary uses its ExtAuth protocol, configured through the AuthService resource, to handle authentication and authorization of incoming requests. ExtAuth supports two protocols: gRPC and plain HTTP. For the gRPC interface, the external service must implement Envoy’s external_auth.proto.

OPA

Open Policy Agent is a well-known, general-purpose policy engine that has emerged as a policy enforcer across the stack, be it API gateways, service meshes, Kubernetes, microservices, CI/CD, or IaC. OPA decouples policy decision-making from policy enforcement: whenever your software needs to make a decision about an incoming request, it queries OPA. OPA-Envoy extends OPA with a gRPC server that implements the Envoy External Authorization API, which makes it usable as an external authz server for Emissary.

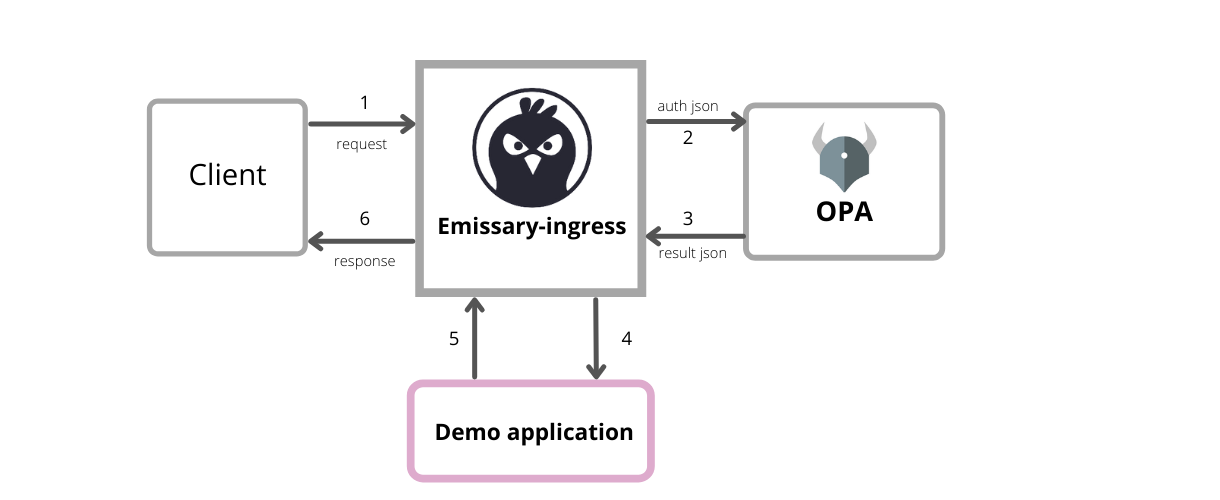

Integrating Emissary Ingress with OPA

The figure above shows the high-level architecture of the Emissary and OPA integration. When an incoming client request reaches Emissary, Emissary sends an authorization request to OPA that contains an input JSON document describing the client request. OPA evaluates this input against the Rego policies provided to it and responds to Emissary. The client request is routed to the API only if OPA's result has allow set to true; otherwise, Emissary denies the request and it never reaches the API. Next, we will install Emissary Ingress and integrate it with OPA for external authorization.
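To make the flow more concrete, here is a minimal sketch of the kind of input document OPA evaluates. It is heavily trimmed: only the two fields read by the policies in this post (attributes.request.http.method and attributes.request.http.path) are included, while the real document built from Envoy's check request carries many more, such as headers, host, and source and destination addresses. The function name build_authz_input is hypothetical.

```shell
# Hypothetical helper: print a trimmed-down version of the document that
# OPA-Envoy exposes to Rego policies as `input`.
# Only the fields used by this post's policies are included; the real
# document built from Envoy's check request contains many more.
# Usage: build_authz_input METHOD PATH
build_authz_input() {
  cat <<JSON
{
  "attributes": {
    "request": {
      "http": {
        "method": "$1",
        "path": "$2"
      }
    }
  }
}
JSON
}
```

For example, `build_authz_input GET /public > input.json` writes a file that, if the opa CLI is installed locally, can be evaluated with `opa eval --input input.json --data policy.rego 'data.envoy.authz.allow'`.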

Getting Started

First, start a Minikube cluster. If you don’t have Minikube installed, follow the Minikube installation guide.

```shell
minikube start
```

Install Emissary Ingress on the Minikube cluster with Helm.

```shell
# Add the repo:
helm repo add datawire https://app.getambassador.io
helm repo update

# Create the namespace and install:
kubectl create namespace emissary && \
kubectl apply -f https://app.getambassador.io/yaml/emissary/2.2.2/emissary-crds.yaml
kubectl wait --timeout=90s --for=condition=available deployment emissary-apiext -n emissary-system

helm install emissary-ingress --namespace emissary datawire/emissary-ingress && \
kubectl -n emissary wait --for condition=available --timeout=90s deploy -lapp.kubernetes.io/instance=emissary-ingress
```

Alternatively, see the Emissary Ingress documentation to install it with Kubernetes YAML manifests.

Configuring routing for the demo application

Each gateway has its own set of configurations for exposing a service. In Emissary, we configure routing through Mapping and Listener resources.

A Mapping resource tells Emissary which service to route an incoming request to. Like Ingress, it is highly configurable; you can learn more on the Introduction to the Mapping resource page. We will create a simple Mapping that routes all incoming requests to our demo application’s service, demo-svc.

```shell
cat <<EOF | kubectl apply -f -
apiVersion: getambassador.io/v3alpha1
kind: Mapping
metadata:
  name: demo-app-mapping
spec:
  hostname: "*"
  prefix: /
  service: demo-svc
EOF
```

The Listener resource tells Emissary where to listen on the network for incoming requests. Here we create a Listener on port 8080 that uses the HTTP protocol and associates it with Hosts in all namespaces. For details, see the Listener documentation.

```shell
cat <<EOF | kubectl apply -f -
apiVersion: getambassador.io/v3alpha1
kind: Listener
metadata:
  name: demo-app-listener-8080
  namespace: emissary
spec:
  port: 8080
  protocol: HTTP
  securityModel: XFP
  hostBinding:
    namespace:
      from: ALL
EOF
```

Install the Demo Application

Install a simple echo server as a demo application.

```shell
cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: demo-app
  template:
    metadata:
      labels:
        app: demo-app
    spec:
      containers:
        - name: http-svc
          image: gcr.io/google_containers/echoserver:1.8
          ports:
            - containerPort: 8080
          env:
            - name: NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
---
apiVersion: v1
kind: Service
metadata:
  name: demo-svc
  labels:
    app: demo-app
spec:
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: 8080
  selector:
    app: demo-app
EOF
```
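Before sending traffic through Emissary, it can help to confirm that the demo pod is up and that demo-svc, the Service the Mapping points at, actually has an endpoint behind it. A minimal sketch, assuming both objects live in the current (default) namespace as created above; demo_app_ready is a hypothetical name.

```shell
# Hypothetical helper: wait for the demo Deployment to become ready, then print
# the pod IPs registered behind demo-svc (the Service the Mapping routes to).
# Assumes demo-app and demo-svc were created in the current namespace.
demo_app_ready() {
  kubectl rollout status deployment/demo-app --timeout=90s || return 1
  ips="$(kubectl get endpoints demo-svc \
    -o jsonpath='{.subsets[*].addresses[*].ip}')" || return 1
  # An empty list means the Service selector matches no ready pod yet.
  if [ -z "$ips" ]; then
    echo "demo-svc has no ready endpoints yet" >&2
    return 1
  fi
  echo "demo-svc endpoints: $ips"
}
```

If this reports no endpoints, requests routed by the Mapping have nowhere to go, which is worth ruling out before suspecting Emissary or OPA.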

Next, expose Emissary through Minikube so you can reach the demo app at different paths:

```shell
minikube service emissary-ingress -n emissary
```

Note: This way of exposing the service may not work for macOS users. They can instead use a busybox pod configured to send requests to the Emissary endpoint inside the cluster.

Copy the URL listed for target port 80. It should be the Minikube node IP (typically 192.168.49.2) followed by a NodePort, for example http://192.168.49.2:30329. Export the NodePort value as the $NODEPORT environment variable and send requests to the following paths:

```shell
curl http://192.168.49.2:$NODEPORT/public
curl http://192.168.49.2:$NODEPORT/private
```

OPA has not been added to the setup yet, so these requests go straight to the API without any policy enforcement.
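Rather than copying the NodePort from the `minikube service` output by hand, it can be read straight from the Service object. A minimal sketch, assuming the Service created by the Helm chart is named emissary-ingress in the emissary namespace and exposes port 80 (matching the target port 80 mentioned above); emissary_nodeport is a hypothetical name.

```shell
# Hypothetical helper: print the NodePort Kubernetes assigned to port 80 of
# the emissary-ingress Service (assumed name and namespace from the Helm install).
emissary_nodeport() {
  port="$(kubectl get service emissary-ingress -n emissary \
    -o jsonpath='{.spec.ports[?(@.port==80)].nodePort}')" || return 1
  # Empty output means no port 80 entry, or no NodePort allocated for it.
  if [ -z "$port" ]; then
    echo "emissary-ingress has no NodePort for port 80" >&2
    return 1
  fi
  echo "$port"
}
```

Usage: `export NODEPORT="$(emissary_nodeport)"`. If your node IP is not 192.168.49.2, `minikube ip` prints the one to use in the curl commands.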

How to Install and Configure OPA?

OPA reads the policies fed to it through a ConfigMap. Create the following ConfigMap, which contains a policy that allows incoming requests only if they use the GET method.

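```shell
# The policy uses the `if` keyword (Rego v1 syntax), which recent OPA
# releases, including the latest-envoy image used below, require.
cat <<EOF | kubectl apply -n emissary -f -
apiVersion: v1
kind: ConfigMap
metadata:
  name: demo-policy
data:
  policy.rego: |-
    package envoy.authz

    import rego.v1

    default allow := false

    allow if {
      input.attributes.request.http.method == "GET"
    }
EOF
```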

OPA can run as an external authorization server either as an independent deployment or as a sidecar to emissary-ingress. Here, we add it as a sidecar. Save the following YAML as opa-patch.yaml.

```yaml
spec:
  template:
    spec:
      containers:
        - name: opa
          image: openpolicyagent/opa:latest-envoy
          ports:
            - containerPort: 9191
          args:
            - "run"
            - "--server"
            - "--addr=0.0.0.0:8181"
            - "--set=plugins.envoy_ext_authz_grpc.addr=0.0.0.0:9191"
            - "--set=plugins.envoy_ext_authz_grpc.query=data.envoy.authz.allow"
            - "--set=decision_logs.console=true"
            - "--ignore=.*"
            - "/policy/policy.rego"
          volumeMounts:
            - mountPath: /policy
              name: demo-policy
              readOnly: true
      volumes:
        - name: demo-policy
          configMap:
            name: demo-policy
```

Patch the emissary-ingress deployment:

```shell
kubectl patch deployment emissary-ingress -n emissary --patch-file opa-patch.yaml
```

Wait until all the emissary-ingress pods have restarted and are in the Running state with the OPA sidecar.
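This wait can also be scripted. A minimal sketch, assuming the pods carry the app.kubernetes.io/instance=emissary-ingress label (the selector the install step waits on); check_opa_sidecar is a hypothetical name. It waits for the rollout and then looks for a container named opa, the name given in opa-patch.yaml.

```shell
# Hypothetical helper: wait for the patched rollout, then check that the
# emissary-ingress pods include a container named "opa".
# Assumes pods are labelled app.kubernetes.io/instance=emissary-ingress.
check_opa_sidecar() {
  kubectl rollout status deployment/emissary-ingress -n emissary --timeout=120s || return 1
  # Space-separated container names across all matching pods.
  names="$(kubectl get pods -n emissary \
    -l app.kubernetes.io/instance=emissary-ingress \
    -o jsonpath='{.items[*].spec.containers[*].name}')" || return 1
  echo "containers: $names"
  case " $names " in
    *" opa "*) return 0 ;;
    *) echo "no opa container found" >&2; return 1 ;;
  esac
}
```

Because old pods may still be terminating right after the rollout, this only checks that at least one matching pod runs the sidecar; `kubectl get pods -n emissary` gives the full picture.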

Now create the following AuthService. AuthService is a resource that configures Emissary to consult an external service for authentication and authorization of incoming requests. We configure it to communicate with OPA on localhost, since OPA runs as a sidecar in the same pod.

```shell
cat <<EOF | kubectl apply -f -
apiVersion: getambassador.io/v3alpha1
kind: AuthService
metadata:
  name: opa-ext-authservice
  namespace: emissary
  labels:
    product: aes
    app: opa-ext-auth
spec:
  proto: grpc
  auth_service: localhost:9191
  timeout_ms: 5000
  tls: "false"
  allow_request_body: true
  protocol_version: v2
  include_body:
    max_bytes: 8192
    allow_partial: true
  status_on_error:
    code: 504
  failure_mode_allow: false
EOF
```
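Besides the gRPC port 9191 that the AuthService points at, the sidecar's `--addr=0.0.0.0:8181` argument also starts OPA's REST API. Querying it through OPA's Data API is a handy way to check the allow rule without going through Emissary. A minimal sketch, assuming a port-forward to the pod is running in another terminal; query_opa is a hypothetical name, and the input is the same trimmed document described earlier, not the full one Envoy sends.

```shell
# Hypothetical helper: evaluate data.envoy.authz.allow through OPA's REST
# Data API on port 8181 (started by --addr=0.0.0.0:8181 in opa-patch.yaml).
# Assumes this is running in another terminal:
#   kubectl port-forward deployment/emissary-ingress 8181:8181 -n emissary
# Usage: query_opa METHOD PATH
query_opa() {
  curl -s -X POST "http://localhost:8181/v1/data/envoy/authz/allow" \
    -H 'Content-Type: application/json' \
    -d "{\"input\":{\"attributes\":{\"request\":{\"http\":{\"method\":\"$1\",\"path\":\"$2\"}}}}}"
}
```

The response is a JSON object whose `result` field holds the decision; with decision logging enabled it may also carry a decision ID.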

Now try the curl requests again. Since the policy accepts any request made with the GET method and places no restriction on the path, both requests get a 200 OK response.

```shell
curl -i http://192.168.49.2:$NODEPORT/public
curl -i http://192.168.49.2:$NODEPORT/private
```
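Each of these requests should also produce a decision log entry, because the sidecar runs with `--set=decision_logs.console=true`, which prints decisions to the opa container's output. A minimal sketch for filtering them, assuming console decision log lines contain the message text "Decision Log" (adjust the pattern if your OPA version formats them differently); opa_decisions is a hypothetical name.

```shell
# Hypothetical helper: print only the decision log lines from the OPA sidecar.
# kubectl logs deployment/... reads from a single pod of the deployment.
# Assumes console decision logs contain the text "Decision Log".
opa_decisions() {
  kubectl logs deployment/emissary-ingress -n emissary -c opa | grep 'Decision Log'
}
```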

Now let’s edit the policy so that it accepts incoming requests only at the /public path; requests to any other path will be denied.

```shell
cat <<EOF | kubectl apply -n emissary -f -
apiVersion: v1
kind: ConfigMap
metadata:
  name: demo-policy
data:
  policy.rego: |-
    package envoy.authz

    import rego.v1

    default allow := false

    allow if {
      input.attributes.request.http.method == "GET"
      input.attributes.request.http.path == "/public"
    }
EOF
```
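Before rolling the change out, you can check locally what the new policy decides for each path. A minimal sketch, assuming the opa CLI is installed on your machine and the Rego from the ConfigMap above has been saved as a local file; opa_local_decision is a hypothetical name, and the input is the trimmed document described earlier.

```shell
# Hypothetical helper: evaluate a local policy file with the opa CLI and print
# the value of data.envoy.authz.allow for the given METHOD and PATH.
# Assumes the opa binary is on PATH.
# Usage: opa_local_decision POLICY_FILE METHOD PATH
opa_local_decision() {
  policy="$1"
  printf '{"attributes":{"request":{"http":{"method":"%s","path":"%s"}}}}' "$2" "$3" |
    opa eval --stdin-input --format raw --data "$policy" 'data.envoy.authz.allow'
}
```

With the updated policy saved as policy.rego, `opa_local_decision policy.rego GET /public` should print true and `opa_local_decision policy.rego GET /private` should print false, since the rule requires both conditions and `allow` defaults to false.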

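Now restart the emissary-ingress deployment so the policy change takes effect. As configured here, OPA loads the policy file only when it starts, so updating the ConfigMap alone is not enough.

```shell
kubectl rollout restart deployment emissary-ingress -n emissary
```

Wait until all the emissary-ingress pods are Running again after the restart.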

Now send a request to /public: it is accepted. A request to /private, however, is denied by OPA with a 403 response, so it never reaches the demo API.

```shell
curl -i http://192.168.49.2:$NODEPORT/public
curl -i http://192.168.49.2:$NODEPORT/private
```
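To turn these manual checks into something repeatable, a small helper can compare the returned status code with the expected one. A minimal sketch; expect_status is a hypothetical name, and it only inspects the HTTP status, not the response body.

```shell
# Hypothetical helper: send a GET request and compare the HTTP status code
# with the expected one. Prints PASS or FAIL; returns non-zero on mismatch.
# Usage: expect_status EXPECTED_CODE URL
expect_status() {
  expected="$1"
  url="$2"
  # -s: silent, -o /dev/null: discard the body, -w: print only the status code
  actual="$(curl -s -o /dev/null -w '%{http_code}' "$url")"
  if [ "$actual" = "$expected" ]; then
    echo "PASS $url -> $actual"
  else
    echo "FAIL $url -> $actual (expected $expected)"
    return 1
  fi
}
```

After the restart, `expect_status 200 "http://192.168.49.2:$NODEPORT/public"` and `expect_status 403 "http://192.168.49.2:$NODEPORT/private"` should both print PASS.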

Conclusion

By using OPA as an external authorization server in an Emissary Ingress setup, decisions about incoming client requests to exposed APIs can be decoupled from the gateway itself. OPA can be added as a plug-and-play policy enforcer to Emissary, as well as to any other gateway that supports the Envoy External Authorization API.

We hope you found this post informative and engaging. Connect with us on Twitter and LinkedIn and start a conversation.
