How to Enable End-to-End Encryption in AWS

Akash Warkhade, Amit Kulkarni, Aashi Modi, Sayed Belal, Sumedh Gondane

Securing applications has become more important than ever. With cyber threats and attacks on the rise, businesses are focused on securing their applications and data, and this is even more critical for complex, distributed cloud native applications with a large footprint. AWS, one of the leading platforms for deploying applications, offers a range of services that help keep your applications safe, resilient, and available.

There are many ways to secure your applications and data, from using suitable authentication and authorization mechanisms to utilizing encryption, which is one of the most effective ways to safeguard sensitive data.

Let us understand how a financial company can leverage AWS services and encryption techniques to safeguard its data. It can set up a data pipeline using AWS Firehose, SQS, S3, and SNS, with encryption enabled on each service so that data is encrypted at every stage of the process. Firehose delivers the data to S3, SQS buffers the data, S3 stores the data with encryption, and SNS notifies stakeholders of new data with encrypted messages.

This multi-layered approach to data security ensures that sensitive data is protected and only accessible by authorized personnel. In this blog post, we will see how to enable end-to-end encryption for AWS services like AWS Firehose, SQS, S3, RDS, and SNS.

Encryption in AWS services

AWS offers more than 200 services from compute to storage, database, and network. It provides a comprehensive security solution for all its services. There is a range of encryption-related services, including AWS Key Management Service (AWS KMS), which allows the management of encryption keys, and Identity and Access Management (IAM), which manages access across AWS services.

We’ll take a closer look at enabling in-transit, at-rest, and server-side encryption for AWS services like Firehose, SQS, S3, RDS, and SNS in this post.

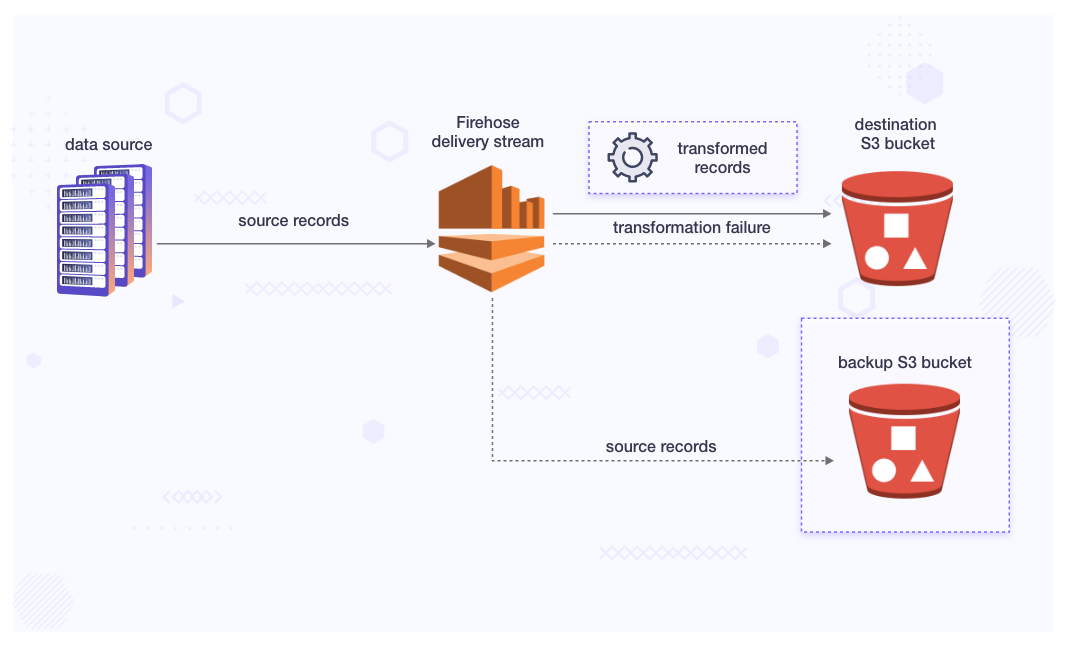

Encryption in AWS Firehose

Amazon Kinesis Data Firehose is a fully-managed service that enables the processing and delivery of real-time streaming data to destinations such as Amazon S3, Amazon Redshift, Amazon Elasticsearch Service, and similar tools and services. A Firehose data pipeline can automatically scale based on the throughput of the incoming data and also provides automatic error handling.

Server-Side Encryption with Kinesis Data Streams as the Data Source

When we configure a Kinesis data stream as the data source of a Kinesis Data Firehose delivery stream, Kinesis Data Firehose no longer stores the data at-rest. Instead, the data is stored only in the data stream.

When we send data from our data sources to Kinesis Data Streams, the service encrypts it with an AWS Key Management Service (AWS KMS) key before storing it at-rest. Later, when Firehose reads the data from the data stream, Kinesis Data Streams first decrypts the data and then sends it to Firehose.

Firehose buffers the data in memory based on the buffering hints we specify. It then delivers the data to the specified destinations without storing the unencrypted data at-rest. This ensures that our data remains protected throughout the process, both when stored and when sent to its final destinations.
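As a rough sketch of the stream-side setting described above, server-side encryption on a Kinesis data stream can be turned on with the Boto3 `start_stream_encryption` call. The helper name and the stream name in the usage note are placeholders that do not come from this post, and the client is passed in as an argument so the helper can be exercised without AWS credentials.

```python
def enable_stream_encryption(kinesis_client, stream_name, kms_key_id="alias/aws/kinesis"):
    """Turn on KMS server-side encryption for a Kinesis data stream.

    kms_key_id may be a key ID, key ARN, or alias. "alias/aws/kinesis" is the
    AWS-managed key for Kinesis; pass a customer-managed key to control the
    key policy yourself.
    """
    params = {
        "StreamName": stream_name,
        "EncryptionType": "KMS",
        "KeyId": kms_key_id,
    }
    kinesis_client.start_stream_encryption(**params)
    return params
```

With real credentials this would be called as `enable_stream_encryption(boto3.client("kinesis"), "example-stream")`.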

Server-Side Encryption with Direct PUT or Other Data Sources

If you send data to your delivery stream using PutRecord or PutRecordBatch, or if you send it using AWS IoT, Amazon CloudWatch Logs, or CloudWatch Events, you have the option to enable server-side encryption. This can be done with the StartDeliveryStreamEncryption operation.

To stop server-side encryption, use the StopDeliveryStreamEncryption operation. Read more about the StartDeliveryStreamEncryption and StopDeliveryStreamEncryption operations in the AWS documentation.
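The two operations above map to the Boto3 Firehose client methods `start_delivery_stream_encryption` and `stop_delivery_stream_encryption`. The following is a minimal sketch, assuming a Direct PUT delivery stream; the helper name and its arguments are illustrative, and the client is injected so the logic can be checked offline.

```python
def set_delivery_stream_encryption(firehose_client, stream_name, enabled=True, key_arn=None):
    """Start or stop server-side encryption on a Firehose delivery stream.

    With key_arn=None an AWS-owned key is used; otherwise the given
    customer-managed key (CUSTOMER_MANAGED_CMK) is used.
    """
    if not enabled:
        firehose_client.stop_delivery_stream_encryption(DeliveryStreamName=stream_name)
        return None

    if key_arn is None:
        config = {"KeyType": "AWS_OWNED_CMK"}
    else:
        config = {"KeyType": "CUSTOMER_MANAGED_CMK", "KeyARN": key_arn}

    firehose_client.start_delivery_stream_encryption(
        DeliveryStreamName=stream_name,
        DeliveryStreamEncryptionConfigurationInput=config,
    )
    return config
```

A real call would pass `boto3.client("firehose")` as the first argument.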

In the scenario where the Customer Master Key (CMK) is of type CUSTOMER_MANAGED_CMK, if the Amazon Kinesis Data Firehose service encounters difficulties decrypting records due to issues like KMSNotFoundException, KMSInvalidStateException, KMSDisabledException, or KMSAccessDeniedException, it allows a grace period of up to 24 hours (retention period) for you to resolve the problem. If the issue persists beyond this retention period, the service skips and discards the records that couldn’t be decrypted. To assist in monitoring these AWS Key Management Service (KMS) exceptions, Amazon Kinesis Data Firehose provides the following four CloudWatch metrics:

- KMSKeyAccessDenied

- KMSKeyDisabled

- KMSKeyInvalidState

- KMSKeyNotFound
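To act on these metrics before the 24-hour retention period runs out, one option is a CloudWatch alarm per metric. The sketch below only builds the parameter sets for `put_metric_alarm` and then applies them; the alarm names, period, and threshold are illustrative choices rather than values from this post, and it assumes the metrics are published in the `AWS/Firehose` namespace with a `DeliveryStreamName` dimension.

```python
KMS_METRICS = (
    "KMSKeyAccessDenied",
    "KMSKeyDisabled",
    "KMSKeyInvalidState",
    "KMSKeyNotFound",
)


def kms_alarm_definitions(stream_name, sns_topic_arn=None):
    """Build one put_metric_alarm parameter set per Firehose KMS metric."""
    alarms = []
    for metric in KMS_METRICS:
        alarm = {
            "AlarmName": f"{stream_name}-{metric}",
            "Namespace": "AWS/Firehose",
            "MetricName": metric,
            "Dimensions": [{"Name": "DeliveryStreamName", "Value": stream_name}],
            "Statistic": "Sum",
            "Period": 300,               # illustrative: 5-minute windows
            "EvaluationPeriods": 1,
            "Threshold": 0,              # alarm on any KMS exception
            "ComparisonOperator": "GreaterThanThreshold",
            "TreatMissingData": "notBreaching",
        }
        if sns_topic_arn:
            alarm["AlarmActions"] = [sns_topic_arn]
        alarms.append(alarm)
    return alarms


def create_kms_alarms(cloudwatch_client, stream_name, sns_topic_arn=None):
    """Create the alarms using an injected CloudWatch client."""
    for alarm in kms_alarm_definitions(stream_name, sns_topic_arn):
        cloudwatch_client.put_metric_alarm(**alarm)
```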

Challenges while encrypting the Firehose

While encrypting the Firehose, the data stored in the S3 bucket also needs to be encrypted with proper roles and permissions. If proper permissions are not applied, the data might not be accessible from the S3 bucket or cannot be stored in S3.

Data processed from Firehose goes to the S3 bucket through an SQS queue. Next, we will cover how we can secure this passage by encrypting it.

Encryption in SQS

Simple Queue Service (SQS) is a fully-managed message queuing service that enables the decoupling and scaling of distributed systems by providing a reliable and highly scalable message queuing service. It allows messages to be transmitted between distributed application components and microservices. It also provides a simple API that enables developers to send, receive, and process messages without needing to worry about the underlying infrastructure. We can use SQS to safely exchange messages between different software components.

In-transit encryption

In-transit encryption is enabled by default in the SQS service.

Server-side encryption (SSE)

Server-side encryption lets us transmit sensitive data in encrypted queues. It protects the contents of the messages in the queues using encryption keys.

Approaches available for encryption:

- Encryption using SQS-owned encryption keys.

- Encryption using keys managed in the AWS Key Management Service (KMS).

Encryption using SQS-owned encryption keys

Amazon SQS managed SSE (SSE-SQS) is managed server-side encryption that uses SQS-owned encryption keys to protect sensitive data sent over message queues. SSE-SQS eliminates the need for manual creation and management of encryption keys or code modifications to ensure data encryption. It enables secure data transmission, ensuring compliance with encryption regulations and requirements without incurring any additional costs.

SSE-SQS protects data at-rest using 256-bit Advanced Encryption Standard (AES-256) encryption. SSE encrypts messages as soon as Amazon SQS receives them. Amazon SQS stores messages in encrypted form and decrypts them only when sending them to an authorized consumer. Read more about SSE of SQS.

Encryption using keys managed in the AWS Key Management Service (KMS)

Integration between Amazon SQS and the AWS Key Management Service (AWS KMS) allows for seamless management of KMS keys for server-side encryption (SSE) purposes. The KMS key that you assign to your queue must have a key policy that includes permissions for all principals that are authorized to use the queue. Read more about configuring server-side encryption (SSE) for a queue (console) here.
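Both approaches come down to queue attributes. As a minimal sketch (the helper names are hypothetical), SSE-SQS is selected with the `SqsManagedSseEnabled` attribute and SSE-KMS with `KmsMasterKeyId`, both applied through the Boto3 `set_queue_attributes` call. The 300-second data key reuse period is an illustrative value.

```python
def sqs_encryption_attributes(kms_key_id=None, data_key_reuse_seconds=300):
    """Return queue attributes for SSE-SQS (no key given) or SSE-KMS (key given).

    SQS attribute values are always strings.
    """
    if kms_key_id is None:
        return {"SqsManagedSseEnabled": "true"}
    return {
        "KmsMasterKeyId": kms_key_id,
        "KmsDataKeyReusePeriodSeconds": str(data_key_reuse_seconds),
    }


def encrypt_queue(sqs_client, queue_url, kms_key_id=None):
    """Apply the chosen encryption attributes to an existing queue."""
    attributes = sqs_encryption_attributes(kms_key_id)
    sqs_client.set_queue_attributes(QueueUrl=queue_url, Attributes=attributes)
    return attributes
```

Calling `encrypt_queue(boto3.client("sqs"), queue_url)` would enable SSE-SQS, while passing a key ID, ARN, or alias as `kms_key_id` would switch the queue to a KMS key.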

AWS KMS permissions

Every KMS key must have a key policy. We cannot modify the key policy of an AWS-managed KMS key. The policy for this KMS key includes permissions for all principals in the account which are authorized to use Amazon SQS.

For a customer-managed KMS key, we must configure the key policy to add permissions for each queue producer and consumer. To achieve this, we designate the producer and consumer as users within the KMS key policy.
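A small sketch of what those key policy entries could look like when generated in code. It assumes producers need `kms:GenerateDataKey` and `kms:Decrypt` while consumers need only `kms:Decrypt`; the function name, the `Sid` values, and the role ARNs in the usage note are hypothetical.

```python
def queue_key_policy_statements(producer_arns, consumer_arns):
    """Build key policy statements for queue producers and consumers.

    Producers encrypt messages (GenerateDataKey, plus Decrypt to verify the
    data key); consumers only decrypt them.
    """
    statements = []
    if producer_arns:
        statements.append({
            "Sid": "AllowQueueProducers",
            "Effect": "Allow",
            "Principal": {"AWS": list(producer_arns)},
            "Action": ["kms:GenerateDataKey", "kms:Decrypt"],
            "Resource": "*",
        })
    if consumer_arns:
        statements.append({
            "Sid": "AllowQueueConsumers",
            "Effect": "Allow",
            "Principal": {"AWS": list(consumer_arns)},
            "Action": ["kms:Decrypt"],
            "Resource": "*",
        })
    return statements
```

For example, `queue_key_policy_statements(["arn:aws:iam::111122223333:role/producer"], ["arn:aws:iam::111122223333:role/consumer"])` returns two statements that can be appended to the `Statement` list of the key policy.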

KMS permissions

Multiple services function as event sources capable of sending events to Amazon SQS queues. To allow these event sources to work with encrypted queues, we must create a customer-managed KMS key and add permissions in the key policy for the service to use the required AWS KMS API methods.

Following is the KMS policy which we need to add while creating the key.

{
  "Version": "2012-10-17",
  "Statement": [{
    "Effect": "Allow",
    "Principal": {
      "Service": "sqs.amazonaws.com"
    },
    "Action": [
      "kms:GenerateDataKey",
      "kms:Decrypt"
    ],
    "Resource": "*"
  }]
}
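As a hedged illustration of adding this policy while creating the key, the sketch below passes it to the Boto3 KMS `create_key` call. One assumption goes beyond the policy shown above: a statement granting the account root `kms:*` is included, mirroring the default key policy, so that the account can still manage the key afterwards. The function names and the key description are placeholders.

```python
import json


def build_key_policy(account_id, service_principal):
    """Combine an account administration statement with the service statement."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Assumption: keep the account able to administer the key.
                "Sid": "EnableAccountAdministration",
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{account_id}:root"},
                "Action": "kms:*",
                "Resource": "*",
            },
            {
                "Sid": "AllowServiceUse",
                "Effect": "Allow",
                "Principal": {"Service": service_principal},
                "Action": ["kms:GenerateDataKey", "kms:Decrypt"],
                "Resource": "*",
            },
        ],
    }


def create_service_key(kms_client, account_id, service_principal="sqs.amazonaws.com"):
    """Create a customer-managed key with the policy and return its ARN."""
    policy = build_key_policy(account_id, service_principal)
    response = kms_client.create_key(
        Description="Customer-managed key for encrypted messaging",
        Policy=json.dumps(policy),
    )
    return response["KeyMetadata"]["Arn"]
```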

Challenges faced while encrypting SQS

In a production environment, consumers and producers are often not in the same AWS region or account. To overcome this, we need to create a customer-managed key with multi-region access and all the required policies in place, since a missing permission can affect the overall data flow.

This is how we encrypted the messages we gathered from the different components we used so far. Now we will look into S3 which is used as static content storage for some of the applications.

Encryption in S3

S3 - Simple Storage Service - is a cloud storage service that enables businesses and individuals to store and retrieve data over the Internet. It provides a highly scalable, reliable, and secure object storage infrastructure that can store and retrieve any amount of data from anywhere. S3 is used for storing static website content, backups, data archives, and media files thus making it a popular choice for businesses of all sizes.

The S3 bucket is encrypted at-rest using Amazon S3 managed keys (SSE-S3). For in-transit encryption to be enforced for all the buckets, we need to add a bucket policy that prevents non-HTTPS connections. To be encrypted end to end, S3 must be encrypted both at-rest and in-transit.

Enabling encryption at-rest

To encrypt all S3 buckets at-rest, we need to add server-side encryption using the SSEAlgorithm AES256. This can be done using the AWS Boto3 SDK or the AWS CLI in combination with a bash script.

Below is a Python code snippet for the same:

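import botocore.exceptions

# Method of a class whose self.client is a Boto3 S3 client.
def check_and_enable_sse(self, bucket):
    """Enable default SSE-S3 (AES256) encryption on the bucket if it has none."""
    try:
        # Raises ClientError when the bucket has no default encryption configuration.
        self.client.get_bucket_encryption(Bucket=bucket)
        print(f"Server Side Encryption is already enabled on this Bucket {bucket}")
    except botocore.exceptions.ClientError as error:
        if error.response['Error']['Code'] == 'ServerSideEncryptionConfigurationNotFoundError':
            print(f"Server Side Encryption is not enabled hence enabling for bucket {bucket}")
            self.client.put_bucket_encryption(
                Bucket=bucket,
                ServerSideEncryptionConfiguration={
                    'Rules': [
                        {
                            'ApplyServerSideEncryptionByDefault': {
                                'SSEAlgorithm': 'AES256',
                            },
                        },
                    ]
                })
        else:
            # Surface unrelated errors (for example, access denied) instead of hiding them.
            raise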

We can also set an organization-level service control policy (SCP) that allows S3 bucket creation only if the encryption flag is set.

Enabling in-transit encryption

We came across a scenario for S3 where we found that some of the S3 buckets are hosted as static websites for our APIs. So, to overcome the situation, we created different approaches for the buckets hosting static websites and buckets which are not hosting static websites.

For buckets that are hosting static websites, we are using CloudFront. We will need to add the AllowSSLRequestsOnly bucket policy to the static website S3 buckets. You can read more about how to secure data on S3.

To encrypt the other S3 buckets in-transit, we need to add a bucket policy that allows only HTTPS connections and thereby complies with the AWS Config rule s3-bucket-ssl-requests-only. The policy explicitly denies all actions on the bucket and its objects when the request meets the condition "aws:SecureTransport": "false".

Bucket Policy:

{
  "Id": "ExamplePolicy",
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowSSLRequestsOnly",
      "Action": "s3:*",
      "Effect": "Deny",
      "Resource": [
        "arn:aws:s3:::DOC-EXAMPLE-BUCKET",
        "arn:aws:s3:::DOC-EXAMPLE-BUCKET/*"
      ],
      "Condition": {
        "Bool": {
          "aws:SecureTransport": "false"
        }
      },
      "Principal": "*"
    }
  ]
}
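Because writing a bucket policy replaces the whole policy document, a bucket that already has a policy needs the deny statement merged in rather than overwritten. The following is a minimal sketch of that merge as a pure function; the function names are hypothetical, and matching on the `Sid` is a simplifying assumption.

```python
import json

SSL_SID = "AllowSSLRequestsOnly"


def ssl_only_statement(bucket):
    """Return the deny statement from the policy above for a given bucket."""
    return {
        "Sid": SSL_SID,
        "Effect": "Deny",
        "Principal": "*",
        "Action": "s3:*",
        "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
        "Condition": {"Bool": {"aws:SecureTransport": "false"}},
    }


def merge_ssl_only_statement(bucket, existing_policy_json=None):
    """Return a policy JSON string that contains the SSL-only statement once.

    Statements already present in the existing policy are preserved.
    """
    if existing_policy_json:
        policy = json.loads(existing_policy_json)
    else:
        policy = {"Version": "2012-10-17", "Statement": []}

    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a lone statement may be a single object
        statements = [statements]
    if not any(s.get("Sid") == SSL_SID for s in statements):
        statements.append(ssl_only_statement(bucket))

    policy["Statement"] = statements
    return json.dumps(policy)
```

The returned string is what would be passed as the `Policy` argument of the Boto3 `put_bucket_policy` call.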

Implementation steps

We created a Python script with the below steps using the Boto3 library:

- List all bucket names.

- Enable Server Side encryption for buckets if not enabled.

- Check which buckets are used for hosting a website.

- Select the buckets which are not used for hosting a website.

- Check the selected buckets for an already added s3-bucket-ssl-requests-only policy.

- If it is not present, add the SSL policy to the bucket.
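The selection part of these steps can be sketched as follows. This is not the authors' script: the helper names are made up, the policy check is a coarse substring match, and errors are inspected through the `response` attribute that botocore's `ClientError` carries, so that the sketch runs without importing botocore.

```python
def error_code(error):
    """Return the AWS error code carried by a ClientError-style exception."""
    return getattr(error, "response", {}).get("Error", {}).get("Code")


def hosts_website(s3_client, bucket):
    """True if the bucket has a static website configuration."""
    try:
        s3_client.get_bucket_website(Bucket=bucket)
        return True
    except Exception as error:
        if error_code(error) == "NoSuchWebsiteConfiguration":
            return False
        raise


def has_ssl_policy(s3_client, bucket):
    """True if the bucket policy already mentions aws:SecureTransport."""
    try:
        policy = s3_client.get_bucket_policy(Bucket=bucket)["Policy"]
    except Exception as error:
        if error_code(error) == "NoSuchBucketPolicy":
            return False
        raise
    return "aws:SecureTransport" in policy


def buckets_needing_ssl_policy(s3_client):
    """List non-website buckets that do not have the SSL-only policy yet."""
    names = [b["Name"] for b in s3_client.list_buckets()["Buckets"]]
    return [
        name for name in names
        if not hosts_website(s3_client, name) and not has_ssl_policy(s3_client, name)
    ]
```

Each bucket returned by `buckets_needing_ssl_policy(boto3.client("s3"))` would then receive the SSL policy through `put_bucket_policy`.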

Challenges faced while encrypting S3

- For at-rest encryption of new S3 objects, we were using the Boto3 library and making a get call for each bucket, which caused the script to run for a long time. We had to take the route of multi-threading to decrease the execution time of the script.

- At-rest encryption for existing objects in the bucket. There are two ways to handle it:

  - Option A: To encrypt existing S3 objects in place, you can use the Copy Object API. This copies the objects with the same name and encrypts the object data using server-side encryption.

  - Option B: Encrypt objects with Amazon S3 Batch Operations. We used Amazon S3 Batch Operations along with S3 copy object to identify and activate S3 Bucket Keys encryption on existing objects.

Option A was a manual process that had to be repeated for multiple buckets, which was very time-consuming, so we went ahead with option B. Amazon S3 Batch Operations allows us to perform repetitive or bulk actions, like copying or tagging multiple objects, with a single request.
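For smaller buckets, the in-place copy and the multi-threading idea can be combined. The sketch below is illustrative only: it copies an object onto itself with SSE-S3 when `head_object` reports no server-side encryption, and runs that over many keys with a thread pool. The function names and worker count are assumptions, and the thread pool is applied per object key here, although the same pattern works per bucket.

```python
from concurrent.futures import ThreadPoolExecutor


def encrypt_in_place(s3_client, bucket, key):
    """Copy an object onto itself with SSE-S3 if it is not encrypted yet.

    Returns True when a copy was issued. A single copy_object call handles
    objects up to 5 GB; larger objects need a multipart copy (not shown).
    """
    head = s3_client.head_object(Bucket=bucket, Key=key)
    if head.get("ServerSideEncryption"):
        return False
    s3_client.copy_object(
        Bucket=bucket,
        Key=key,
        CopySource={"Bucket": bucket, "Key": key},
        ServerSideEncryption="AES256",
    )
    return True


def encrypt_keys(s3_client, bucket, keys, max_workers=8):
    """Run encrypt_in_place over many keys in parallel; return the copied keys."""
    keys = list(keys)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(lambda k: encrypt_in_place(s3_client, bucket, k), keys))
    return [k for k, copied in zip(keys, results) if copied]
```

On a versioned bucket each copy creates a new object version, which is worth keeping in mind before running this at scale.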

Using the approaches and tools mentioned above, we were successfully able to encrypt the contents within our S3 bucket. While the S3 bucket is mostly used for storing objects and files, AWS RDS is used for deploying, storing, and managing relational databases. Let us see how encryption works in the case of AWS RDS.

Encryption in RDS

RDS (Relational Database Service) is a managed cloud database service that provides an easy-to-use and scalable platform for deploying, operating, and scaling relational databases in the cloud. RDS supports popular database engines such as MySQL, PostgreSQL, Oracle, and Microsoft SQL Server, and offers features such as automated backups, automated software patching, and automated scaling.

Let us see how we can encrypt RDS for a more secure setup.

Encryption at-rest

Data encryption at-rest refers to encrypting the underlying storage for DB instances and their automated backups, read replicas, and snapshots. The RDS resources are encrypted using AWS KMS keys, which can be the default AWS KMS key or a customer-managed key, by configuring DB encryption.

The Server Side Encryption needs to be enabled during DB creation. In case SSE wasn’t configured during creation, the existing DB can be encrypted at-rest by copying the DB snapshot with a KMS key and restoring it on a newly created DB with SSE enabled.
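The snapshot route can be sketched with three Boto3 RDS calls: `create_db_snapshot`, `copy_db_snapshot` with a `KmsKeyId`, and `restore_db_instance_from_db_snapshot`. This is a minimal outline under stated assumptions, not a production procedure: the identifier naming scheme is invented for illustration, and the client is passed in so the sequence can be checked without an AWS account.

```python
def encrypt_existing_db(rds_client, db_instance_id, kms_key_id, suffix="encrypted"):
    """Create an encrypted copy of an unencrypted DB instance via snapshots.

    Returns the identifier of the new, encrypted DB instance.
    """
    snapshot_id = f"{db_instance_id}-plain-snapshot"
    encrypted_snapshot_id = f"{db_instance_id}-{suffix}-snapshot"
    new_instance_id = f"{db_instance_id}-{suffix}"
    waiter = rds_client.get_waiter("db_snapshot_available")

    # 1. Snapshot the existing (unencrypted) instance.
    rds_client.create_db_snapshot(
        DBInstanceIdentifier=db_instance_id,
        DBSnapshotIdentifier=snapshot_id,
    )
    waiter.wait(DBSnapshotIdentifier=snapshot_id)

    # 2. Copy the snapshot; supplying a KMS key makes the copy encrypted.
    rds_client.copy_db_snapshot(
        SourceDBSnapshotIdentifier=snapshot_id,
        TargetDBSnapshotIdentifier=encrypted_snapshot_id,
        KmsKeyId=kms_key_id,
    )
    waiter.wait(DBSnapshotIdentifier=encrypted_snapshot_id)

    # 3. Restore a new instance from the encrypted snapshot.
    rds_client.restore_db_instance_from_db_snapshot(
        DBInstanceIdentifier=new_instance_id,
        DBSnapshotIdentifier=encrypted_snapshot_id,
    )
    return new_instance_id
```

The restored instance is a new resource with its own endpoint, so applications have to be repointed, and settings such as security groups or the subnet group may need to be passed explicitly to the restore call.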

There are some caveats and considerations that need to be reviewed before we enable SSE for an AWS RDS instance. Please refer to the official AWS RDS documentation for the details and limitations of DB encryption.

Encryption in-transit

TLS/SSL provides security for data in-flight between the client and AWS RDS instance. By enabling TLS, we want to validate that the connection is being made to the AWS RDS instance which is done by checking the server certificate that is automatically installed on all DB instances.

Each DB engine has its own method of implementing TLS encryption. Please refer to the official AWS documentation for the implementation process for each DB engine.

Additional steps to enable in-transit encryption

- TLS Verification: Once TLS is enabled for AWS RDS, we need to verify that all the connections are secure as intended. Clients need to be configured to trust the certificates issued using AWS PCA. This can be performed by adding the AWS PCA Root CA certificate on the client.

- Client Compatibility: The client needs to be configured with an “sslmode” (allow, require, verify-full, etc.) compatible with the application library. Check out how to configure it for various clients.
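As one concrete case, here is a hedged sketch for a PostgreSQL client, which is an assumption since the post does not single out an engine. It builds a libpq-style connection string with `sslmode` and `sslrootcert`; the host, database, user, and certificate path are placeholders, and values containing spaces would need quoting that this sketch does not handle.

```python
POSTGRES_SSLMODES = {"disable", "allow", "prefer", "require", "verify-ca", "verify-full"}


def postgres_tls_dsn(host, dbname, user, ca_bundle_path, port=5432, sslmode="verify-full"):
    """Build a libpq keyword/value connection string that enforces TLS.

    verify-full checks both the certificate chain (against ca_bundle_path)
    and that the server host name matches the certificate.
    """
    if sslmode not in POSTGRES_SSLMODES:
        raise ValueError(f"unsupported sslmode: {sslmode}")
    parts = {
        "host": host,
        "port": port,
        "dbname": dbname,
        "user": user,
        "sslmode": sslmode,
        "sslrootcert": ca_bundle_path,
    }
    return " ".join(f"{name}={value}" for name, value in parts.items())


print(postgres_tls_dsn("db.example.internal", "app", "app_user", "/etc/ssl/certs/rds-ca.pem"))
# prints: host=db.example.internal port=5432 dbname=app user=app_user sslmode=verify-full sslrootcert=/etc/ssl/certs/rds-ca.pem
```

The resulting string can be handed to a libpq-based driver; the password is left out on purpose so it can be supplied separately.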

Encryption in SNS

Amazon Simple Notification Service is a managed service that provides message delivery from publishers to subscribers.

Publishers and subscribers interact asynchronously through message exchange using a topic, which serves as a communication channel. Clients can subscribe to the topic and receive messages sent to it. They can utilize various supported endpoint types, including Amazon Kinesis Data Firehose, Amazon SQS, AWS Lambda, HTTP, email, mobile push notifications, and mobile text messages.

There are two types of encryption associated with the SNS:

- In-transit

- Server-side encryption

In-transit encryption

In-transit encryption is enabled by default in the SNS service.

Server-side encryption (SSE)

Server-side encryption lets us transmit sensitive data in encrypted topics. SSE protects the contents of the messages in the topics using encryption keys, ensuring that the content of a message within an Amazon SNS topic is encrypted.

SSE doesn’t encrypt the following:

- Topic metadata

- Message metadata

- Per-topic metrics

Read more about the SSE for SNS.
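Enabling SSE on an existing topic is a single attribute change. The sketch below uses the Boto3 `set_topic_attributes` call with the `KmsMasterKeyId` attribute; the helper name is hypothetical, and the default `alias/aws/sns` refers to the AWS-managed key for SNS, which can be swapped for a customer-managed key.

```python
def enable_topic_encryption(sns_client, topic_arn, kms_key_id="alias/aws/sns"):
    """Enable server-side encryption on an SNS topic with the given KMS key.

    kms_key_id may be a key ID, key ARN, or alias.
    """
    sns_client.set_topic_attributes(
        TopicArn=topic_arn,
        AttributeName="KmsMasterKeyId",
        AttributeValue=kms_key_id,
    )
    return kms_key_id
```

A real call would pass `boto3.client("sns")` and the topic ARN.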

KMS permissions

Several services act as event sources that can send notifications to Amazon SNS. To allow these event sources to work with encrypted SNS, we must create a customer-managed KMS key and add permissions in the key policy for the service to use the required AWS KMS API methods.

Following is the KMS policy which we need to add while creating the key.

{
  "Version": "2012-10-17",
  "Statement": [{
    "Effect": "Allow",
    "Principal": {
      "Service": "sns.amazonaws.com"
    },
    "Action": [
      "kms:GenerateDataKey",
      "kms:Decrypt"
    ],
    "Resource": "*"
  }]
}

Challenges enabling encryption in SNS

- Multiple components may use the same SNS topic as the notification service for different producers and consumers.

- For data to flow seamlessly through the entire framework, the KMS key policies must be added correctly.

In the above sections, we saw that by encrypting sensitive data at-rest and in-transit, organizations can ensure that data is protected from unauthorized access, both inside and outside of AWS for various AWS solutions.

Summary

Security is a major constraint when designing a system architecture, and encryption plays a major role in securing communication between different components of the architecture. Ensuring the security of data and systems is a critical aspect of any cloud computing environment. AWS provides a suite of security features that can help organizations protect their data and applications, including encryption at-rest and in-transit, network security, access control, and compliance certifications.

In this blog post, we saw how we can configure encryption for multiple AWS offerings like Firehose, SQS, S3, RDS, and SNS to name a few. You can configure encryption for other offerings as well in a similar way.

If you are using AWS, it is important to familiarize yourself with these security features and incorporate them into your AWS environment. This will help you mitigate security risks and protect your business against potential data breaches or cyber-attacks.

For more assistance, please feel free to reach out and start a conversation with Akash Warkhade, Amit Kulkarni, Aashi Modi, Sayed Belal & Sumedh Gondane, who have jointly written this detailed blog post.

Looking for help with securing your infrastructure or want to outsource DevSecOps to the experts? Learn why so many startups & enterprises consider us as one of the best DevSecOps consulting & services companies.
