Migrating Jenkins Freestyle Jobs to Multibranch Pipeline

Anyone who started working on Jenkins recently, or even a couple of years ago, would by default create pipelines for their CI/CD workflow. There is a parallel world of people who have been using Jenkins since its inception, who didn't race to keep up with new Jenkins features and stayed loyal to “Freestyle jobs”. Don't get me wrong: a Freestyle job does the work, and it can be an efficient and simple solution if you have a one-dimensional branching structure in your source control. In this post, I discuss why switching from Freestyle jobs to Multibranch Pipeline was needed for one of our enterprise customers and how it has made their life easier.

Freestyle vs. Pipeline jobs

Freestyle jobs are suitable for a simple CI/CD workflow combined with a simple branching strategy. If your CI/CD design has multiple stages, they are not the right fit. That's where Pipeline comes in.

A Pipeline job aggregates all the stages of your workflow (build, test, deploy, etc.) into one unit. Stages run sequentially by default, but they can also run in parallel. All of them can be defined as code in a file called Jenkinsfile, using the Pipeline Domain-Specific Language (DSL). Because this file is committed to Git, it is a step towards CI/CD-as-Code. You can learn more about Pipelines and how to write a Jenkinsfile in the Jenkins Pipeline documentation.
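To make the sequential-versus-parallel point concrete, here is a minimal Declarative Jenkinsfile sketch: `Build`, `Test` and `Deploy` run one after the other, while the two stages nested under `parallel` run at the same time. The stage names and `echo` steps are placeholders.

```groovy
// Minimal Declarative Pipeline: top-level stages run in order,
// stages nested under 'parallel' run concurrently.
pipeline {
    agent any
    stages {
        stage('Build') {
            steps {
                echo 'Building...'
            }
        }
        stage('Test') {
            parallel {
                stage('Unit tests') {
                    steps {
                        echo 'Running unit tests...'
                    }
                }
                stage('Lint') {
                    steps {
                        echo 'Running lint...'
                    }
                }
            }
        }
        stage('Deploy') {
            steps {
                echo 'Deploying...'
            }
        }
    }
}
```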

Why did we move to Multibranch Pipeline?

Before moving to Pipeline, we used to create a separate job for each environment (dev, qa, uat and production), which was time-consuming. The number of jobs kept increasing and became really hard to manage. Pipeline jobs made complete sense in our case because we had a standardized branching strategy in version control, with each branch mapping to an environment. In most cases the code was consistent across branches, with the differences controlled by variables, which let us write a generic Jenkinsfile.
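A minimal sketch of how one generic Jenkinsfile can derive per-environment values from the branch name instead of hard-coding them in separate jobs. The environment names come from the text above, but the branch-to-environment mapping and the bucket naming scheme are assumptions (qa is left out because the branch that feeds it isn't specified here), and both functions are hypothetical, written as plain Groovy.

```groovy
// Hypothetical mapping from branch name to target environment.
// Adjust the cases to match your own branching strategy.
String environmentFor(String branch) {
    switch (branch) {
        case 'develop':
            return 'dev'
        case ~/release\/.+/:   // any release branch, e.g. release/1.0.0
            return 'uat'
        case 'master':
            return 'production'
        default:
            return null        // feature branches: CI only, no deployment
    }
}

// Hypothetical naming scheme: one variable drives the per-environment bucket.
String artifactBucketFor(String environment) {
    return "my-app-artifacts-${environment}"
}

['develop', 'release/1.0.0', 'master', 'feature/login'].each { b ->
    String environment = environmentFor(b)
    String target = environment ? artifactBucketFor(environment) : 'no deployment'
    println "${b} -> ${target}"
}
```

The point of the design is that adding an application or an environment means editing one mapping, not cloning another job.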

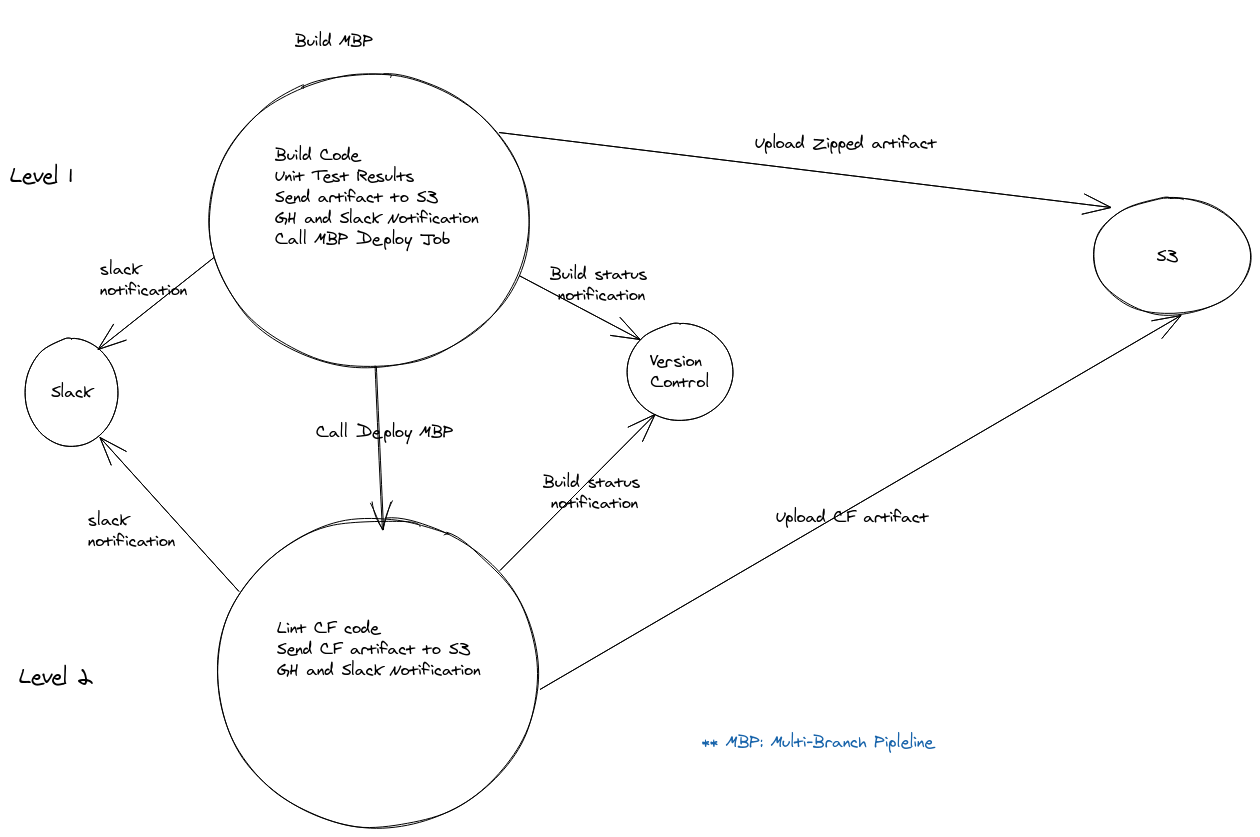

Our CI/CD flow: For the sake of simplicity, I’m going to consider a two level CI/CD Pipeline, in the first level, We build our code, send the package to S3 and call the deployment job which is also a Multibranch Pipeline job. In the second level linting of CloudFormation code and sending the artifact to S3 is done. Take a look at the image below to understand the flow:
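The Jenkinsfile of the second-level job isn't shown in this post. A minimal sketch of what it could look like, assuming the `cfn-lint` CLI is available on the agent and the Pipeline: AWS Steps plugin (which provides `s3Upload`) is installed. The template path `cloudformation/template.yaml` and the bucket name are placeholders, and AWS credentials handling (for example with that plugin's `withAWS` step) is left out.

```groovy
// Hypothetical Jenkinsfile for the second-level (deployment) Multibranch Pipeline.
// 'agent any' keeps the sketch short; the first-level template uses a Kubernetes pod instead.
pipeline {
    agent any
    stages {
        stage('Lint CloudFormation') {
            steps {
                // A non-zero exit code from cfn-lint fails the stage
                sh 'cfn-lint cloudformation/template.yaml'
            }
        }
        stage('Upload template to S3') {
            steps {
                // s3Upload is assumed to come from the Pipeline: AWS Steps plugin
                s3Upload(file: 'cloudformation/template.yaml', bucket: 'bucket_name', path: "${env.BRANCH_NAME}/template.yaml")
            }
        }
    }
}
```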

Sample Jenkinsfile Template

Please note: the pipeline runs in a Kubernetes pod, and each stage runs its steps in one of that pod's containers. Refer to the Jenkins Kubernetes plugin documentation to learn more.

#!groovy

@Library('jenkins-pipeline-shared@master') _

// Slack channel where you want to send the job notification
def slack_channel = 'slack_channel'

// Bucket name where you want to send the artifact
def bucketName = "bucket_name"

// Downstream deploy job (also a Multibranch Pipeline)
def deployJob = "Deploy_job"

// Branch being built; Multibranch Pipeline sets BRANCH_NAME for every branch job
def branch = env.BRANCH_NAME

pipeline {
    agent {
        kubernetes {
            label "node-build"
            defaultContainer 'jnlp'
            yaml """
                apiVersion: v1
                kind: Pod
                metadata:
                  labels:
                    docker: "true"
                spec:
                  containers:
                  - name: git
                    image: alpine/git
                    command:
                    - cat
                    tty: true
                  - name: node
                    image: node:12.19-alpine
                    command:
                    - cat
                    tty: true
            """
        }
    }

    options {
        buildDiscarder(logRotator(numToKeepStr: '10'))
        skipDefaultCheckout true
        timestamps()
    }

    stages {
        stage('Checkout') {
            steps {
                container('git') {
                    script {
                        // SCM checkout; keep the commit hash for the notifications in post
                        def scmVars = checkout scm
                        env.GIT_COMMIT = scmVars.GIT_COMMIT
                    }
                }
            }
        }

        stage('NPM install') {
            steps {
                container('node') {
                    script {
                        sh """
                            # Build commands
                        """
                    }
                }
            }
        }

        stage('run tests') {
            steps {
                script {
                    // JUnit commands
                }
            }
        }

        stage('Upload Artifacts') {
            steps {
                container('node') {
                    script {
                        // Plugin step to upload the artifact to S3 (bucketName)
                    }
                }
            }
        }
    }

    // post must sit inside the pipeline block
    post {
        always {
            sendNotifications currentBuild.result, "Job: '${env.JOB_NAME}', VERSION: 'commit-${env.GIT_COMMIT}-build-${env.BUILD_NUMBER}'", slack_channel
        }

        success {
            bitbucketStatusNotify(
                buildKey: env.JOB_NAME,
                buildName: env.JOB_NAME,
                buildState: 'SUCCESSFUL',
                repoSlug: 'repo_name',
                commitId: env.GIT_COMMIT
            )
            script {
                // Trigger the downstream deploy job only for environment branches;
                // '/' in a branch name is stored as %2F in the Multibranch job name
                if (['develop', 'release/1.0.0', 'master'].contains(branch)) {
                    build(job: "${deployJob}/" + branch.replaceAll('/', '%2F'))
                }
            }
        }

        unsuccessful {
            bitbucketStatusNotify(
                buildKey: env.JOB_NAME,
                buildName: env.JOB_NAME,
                buildState: 'FAILED',
                repoSlug: 'repo_name',
                commitId: env.GIT_COMMIT
            )
        }
    }
}
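The template calls `sendNotifications` from the `jenkins-pipeline-shared` library, whose source isn't shown here. The following is a minimal sketch of what such a global variable could look like, assuming the file lives at `vars/sendNotifications.groovy` and the Slack Notification plugin (which provides the `slackSend` step) is installed. The colour mapping is illustrative, not the library's actual behaviour.

```groovy
// vars/sendNotifications.groovy (hypothetical file in the shared library)

// Map a Jenkins build result to a Slack attachment colour.
String slackColor(String buildStatus) {
    switch (buildStatus) {
        case 'SUCCESS':
            return 'good'
        case 'UNSTABLE':
            return 'warning'
        default:
            return 'danger' // FAILURE, ABORTED, NOT_BUILT, or unknown
    }
}

// Entry point used in the Jenkinsfile as: sendNotifications status, message, channel
def call(String buildStatus, String message, String channel) {
    // currentBuild.result can be null for a successful build, so default to SUCCESS
    String status = buildStatus ?: 'SUCCESS'
    slackSend(channel: channel, color: slackColor(status), message: "${status}: ${message}")
}
```

Keeping `slackColor` a plain function makes the status-to-colour logic testable outside Jenkins; only `call` depends on `slackSend`.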

What are the benefits of Jenkins’ Multibranch Pipeline?

CI/CD as code: We are transitioning to a phase where each component of the infrastructure is written as code, and Pipeline helped us achieve that. We track every change to the CI/CD workflow by keeping it alongside the application code in version control. Now we have audit trails, code reviews, and automatic creation of Pipeline jobs for each branch.

Reducing the number of jobs: Earlier we used to create a job for each branch, so as the number of applications grew, the number of jobs grew with it. Moving to Multibranch Pipeline reduced the number of jobs by 75 percent. This is a huge drop, and our Jenkins instance looks a lot cleaner now.

CI as part of the Pipeline: Before moving to pipelines, we had a dedicated CI job for each application. Now CI is part of the same Pipeline job. We achieve this with the “when” directive of the Declarative Pipeline syntax: whenever the branch is anything other than develop, release, or master, the pipeline doesn't upload the artifact and doesn't call the downstream deployment pipeline, so no deployment happens.

when {
    anyOf { branch 'develop'; branch 'release/*'; branch 'master' }
}
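In a Declarative Jenkinsfile the condition sits inside the stage it guards. A sketch of the sample template's Upload Artifacts stage with the condition added (the upload step itself is still a placeholder):

```groovy
stage('Upload Artifacts') {
    // Run only for environment branches; feature branches stop after the tests
    when {
        anyOf { branch 'develop'; branch 'release/*'; branch 'master' }
    }
    steps {
        container('node') {
            script {
                // Plugin step to upload the artifact to S3 (bucketName)
            }
        }
    }
}
```

`branch 'release/*'` is a wildcard pattern, so every release branch matches, not only `release/1.0.0`.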

Extensibility: One of Jenkins' strong points is its large number of plugins, but in certain scenarios plugins limit what you can do, which can become a bottleneck. A Jenkinsfile lets you write Groovy code for the scenarios that plugins can't handle.

Better troubleshooting when a job fails: Since the Pipeline is divided into multiple stages, whenever there is an issue you can pinpoint the stage where the error happened, which makes troubleshooting more focused.

Easy migration from one Jenkins instance to another: Because jobs are defined in Jenkinsfiles, migrating them from one Jenkins instance to another has become very easy.

Challenges faced during the Freestyle to Multibranch Pipeline migration

Here are a few challenges we faced during the migration:

Forward slash (/) is converted to %2F in the job name: Developer teams commonly use a forward slash (/) in feature branch names. Whenever a new Pipeline is created automatically for a new branch, the / is converted to %2F in the job name. Whenever you reference the branch name in a job or call a job as a downstream project, you have to account for this conversion. The example below calls a downstream project:

if (branch == 'release/1.0.0') {
    build(job: "${deployJob}" + "/" + "${branch}".replaceAll('/', '%2F'))
}
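The conversion can be wrapped in a small helper so that every `build` call encodes the name the same way, and the repeated `if` blocks for each environment branch collapse into one check. A plain-Groovy sketch; the function names are hypothetical, and `triggersDeploy` uses a `release/` prefix to mirror the `release/*` pattern of the `when` clause rather than the single `release/1.0.0` branch.

```groovy
// Build the full name of a branch job inside a Multibranch Pipeline.
// A branch such as release/1.0.0 becomes a job named release%2F1.0.0,
// so '/' must be encoded before passing the name to the build step.
String branchJobName(String multibranchJob, String branch) {
    return "${multibranchJob}/${branch.replace('/', '%2F')}"
}

// Environment branches that should trigger a deployment (hypothetical rule).
boolean triggersDeploy(String branch) {
    return ['develop', 'master'].contains(branch) || branch.startsWith('release/')
}

['develop', 'release/1.0.0', 'feature/login'].each { b ->
    if (triggersDeploy(b)) {
        // In a Jenkinsfile this would be: build(job: branchJobName(deployJob, b))
        println "would trigger ${branchJobName('Deploy_job', b)}"
    } else {
        println "skipping deploy for ${b}"
    }
}
```

In a Jenkinsfile, the same functions can be defined at the top level and called from the `script` block in `post { success { ... } }`.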

Migrating a large number of Freestyle jobs to Pipeline is time-consuming: If you have a lot of jobs to migrate, as we did, it becomes tedious and time-consuming. It's less tedious if all applications follow a consistent build process. There is a Jenkins project that can partially assist you in converting Freestyle jobs to a declarative Jenkinsfile: the Declarative Pipeline Migration Assistant plugin.

I say it only partially assists you because it doesn't convert every plugin to declarative syntax, but it's a good start for getting your Jenkinsfile structure ready, and you can build on top of that.

If you are building pipelines from scratch, I recommend the Blue Ocean plugin: it provides sophisticated visualizations and can create a basic Pipeline within a minute, just by clicking.

Not all plugins work as expected with Pipeline: some plugins that worked fine with Freestyle jobs did not work with Pipeline (the Artifactory plugin, for example). In some cases these plugins are critical, for example the Active Choices plugin for rendering Jenkins parameters dynamically.

Conclusion:

Migrating from Freestyle jobs to Pipelines has reduced our number of jobs by almost 75%. The effort and time required to create jobs for a new application have dropped drastically, and our CI/CD workflows are now designed far more consistently. I would highly recommend switching to pipelines if you haven't yet!

I hope the article was helpful to you. Try this process and share your experience in the comments section below, or start a conversation on Twitter and LinkedIn.

Happy Coding :)

