Model Context Protocol: The USB-C for AI: Simplifying LLM Integration

With new models dropping almost daily, developing AI applications mirrors computers’ pre-standardization era, when connecting devices meant juggling multiple cables and connectors along with custom drivers. Building an AI application today is complex. Developers must deal with different integration codes to connect LLMs with different external data sources, vendors’ specific implementations, and security concerns to top them all. These lead to fragile systems and developer overhead with the constant risk of vendor lock-in.

While USB brought standardization for connecting peripheral devices, there was a growing need for a USB-type standard for AI apps, too. This is precisely the problem that the Model Context Protocol (MCP) solves. It has the potential to become a universal standard to simplify how AI models interact with external systems.

In this blog post, we’ll explore MCP and its standardized approach to connecting large language models (LLMs) with different data sources, tools, and services.

Why MCP?

Large Language Models are transformative when they have access to the right context, but connecting these models to necessary data sources and tools has become a significant bottleneck in AI development. Current approaches force teams to build custom integrations for each use case, leading to fragmented codebases and duplicated effort across the industry.

MCP emerges as a response to four critical challenges that impede efficient, secure AI integration

- Lack of standardization: There are no common and standardized interfaces for integrating LLMs with data sources and tools. Developers are forced to create custom integration for data sources and external tools. This leads to a fragmented codebase, resulting in inconsistent implementations.

- Vendor lock-in: Using proprietary integrations carries the risk of vendor lock-in. Being tied to a particular vendor means increased switching costs and limited flexibility as your needs and the technology evolve.

- Integration complexity: Developing and maintaining different custom integration tools requires specialized knowledge. This also slows down the development process and creates unstable systems that can break when underlying components change.

- Security risks: Inconsistent security implementations across custom integrations create vulnerabilities that can become extremely dangerous, as attackers can gain access to sensitive data and critical systems.

What is MCP?

Model Context Protocol (MCP) is an open source protocol developed by Anthropic (the team behind Claude) to standardize AI integrations. Being open source, MCP aims to foster collaboration and establish a universal standard that benefits the entire AI ecosystem.

MCP provides a standardized method for connecting LLM apps with external data sources and tools. It provides implementation guidelines to create a universal interface layer between AI models and the context they need to function. MCP establishes common patterns for data access, tool execution, and prompt management, saving developers from building custom integrations. This enables developers to focus on building flexible AI apps that have seamless access to files, databases, APIs, and other resources without being tied to a proprietary implementation.

So, you do not need to write a custom integration for Claude or a different one for Perplexity to connect to your product’s documentation and other internal tools. With MCP, you can implement a single protocol that allows your AI app to access all the resources seamlessly through standardized requests.

MCP - architecture & components

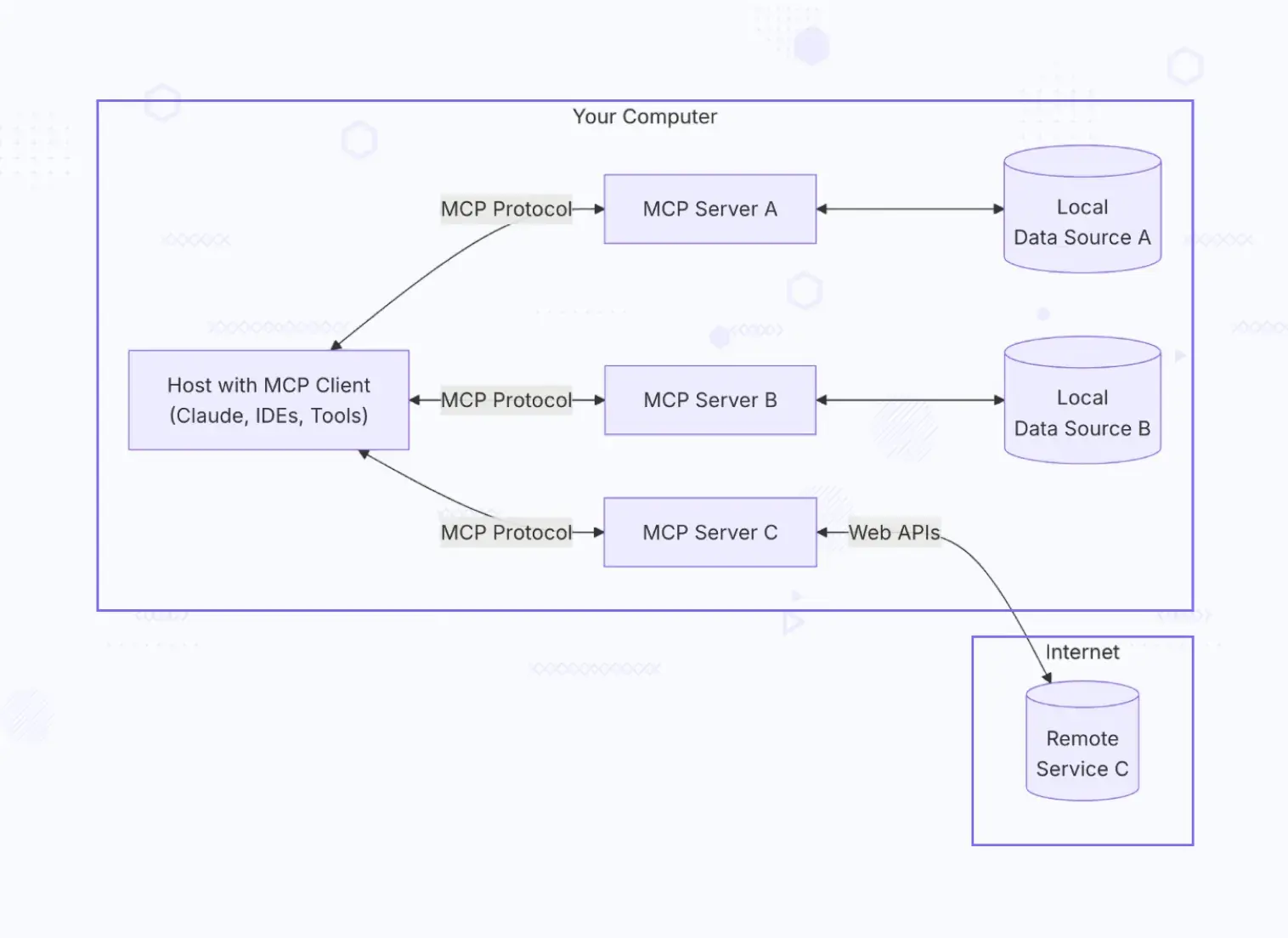

MCP follows a client-server architecture and comprises 5 key components that work harmoniously to create secure and standardized connections between LLMs and external resources.

- MCP Hosts: These are the apps that need contextual AI capabilities. These applications could be chatbots, AI-enhanced IDEs, or custom applications. These hosts integrate with the MCP client to access data and external tools through the protocol.

- MCP Clients: Clients are responsible for maintaining the 1:1 connection with MCP Servers. They handle the protocol specifics that the server doesn’t have. Clients translate host requests into standardized MCP messages, manage connections, and also handle authentication with servers.

- MCP Servers: These are the core of the ecosystem and expose critical capabilities through the standardized MCP interface. A server might provide access to databases or integrate with external tools and remote APIs - all through a consistent protocol.

- Local Data Sources: These are the local files, databases, and services that MCP servers can securely access. The protocol provides granular permissions that ensure AI models access only authorized resources.

- Remote Services: These are external services available over the Internet via APIs that MCP servers can connect to. They might include knowledge bases, code bases, or specialized tools.

This architecture is modular and composable, so organizations can implement different servers for different data sources while having a consistent interface. This also ensures the separation of concerns as the hosts focus on AI functionality, clients handle protocol details, and servers manage data access and tool execution.

Understanding the protocol specification

MCP provides a blueprint for developers to build MCP clients or servers. It helps understand how various components interact with each other and standardizes message formats, interaction patterns, and error handling for consistent implementation.

Core communication patterns

MCP is built around three primary message types:

- Requests: These are messages sent by clients to servers to initiate actions or retrieve information. Each request has a unique ID that correlates with its corresponding response.

- Response: Messages sent from servers to clients in reply to requests. These contain the requested information or status as requested.

- Notifications: These are async messages sent from servers to clients without an associated request. They inform clients about relevant state changes or events.

These three enable both synchronous (fetching a response from a tool) and asynchronous (notifying the completion of an execution) communication.

Apart from these core communication patterns, there are a few key protocol concepts:

- Resources: These are abstractions for data that can be accessed by LLMs. These can be local files, databases, results from an API call, or generated content from a tool execution. These resources have unique IDs that can be referenced across the protocol, allowing LLMs to request specific information. By standardizing how resources are identified and interacted with, MCP creates a consistent interface for working with data.

- Tools: Tools represent the actions that LLMs can perform through MCP servers. They enable functional capabilities like searching through repositories, querying databases, executing code, or sending emails. Each tool has a well-defined interface specifying the required arguments and return values, standardizing the way LLMs interact with external systems through a consistent interface.

- Prompts: Prompts provide a way to define a reusable prompt template for prompts and workflows. These create standardized interaction patterns, define multi-step workflows where output from one step is the input for the other, enable sharing of prompts across applications and help versioning pompts. By doing so MCP enables building structured and manageable AI applications.

Content types for rich content

MCP also supports different content types to enable rich interactions between LLMs and external systems. Some of these are:

- TextContent: Represents plain text or markdown text that can be directly incorporated into LLMs.

- ImageContent: Enables sharing of visual content between models capable of processing images.

- EmbeddedResource: Allows different resources to refer to one another, creating a hierarchy of information that LLMs can interact with.

How MCP works: a simplified example

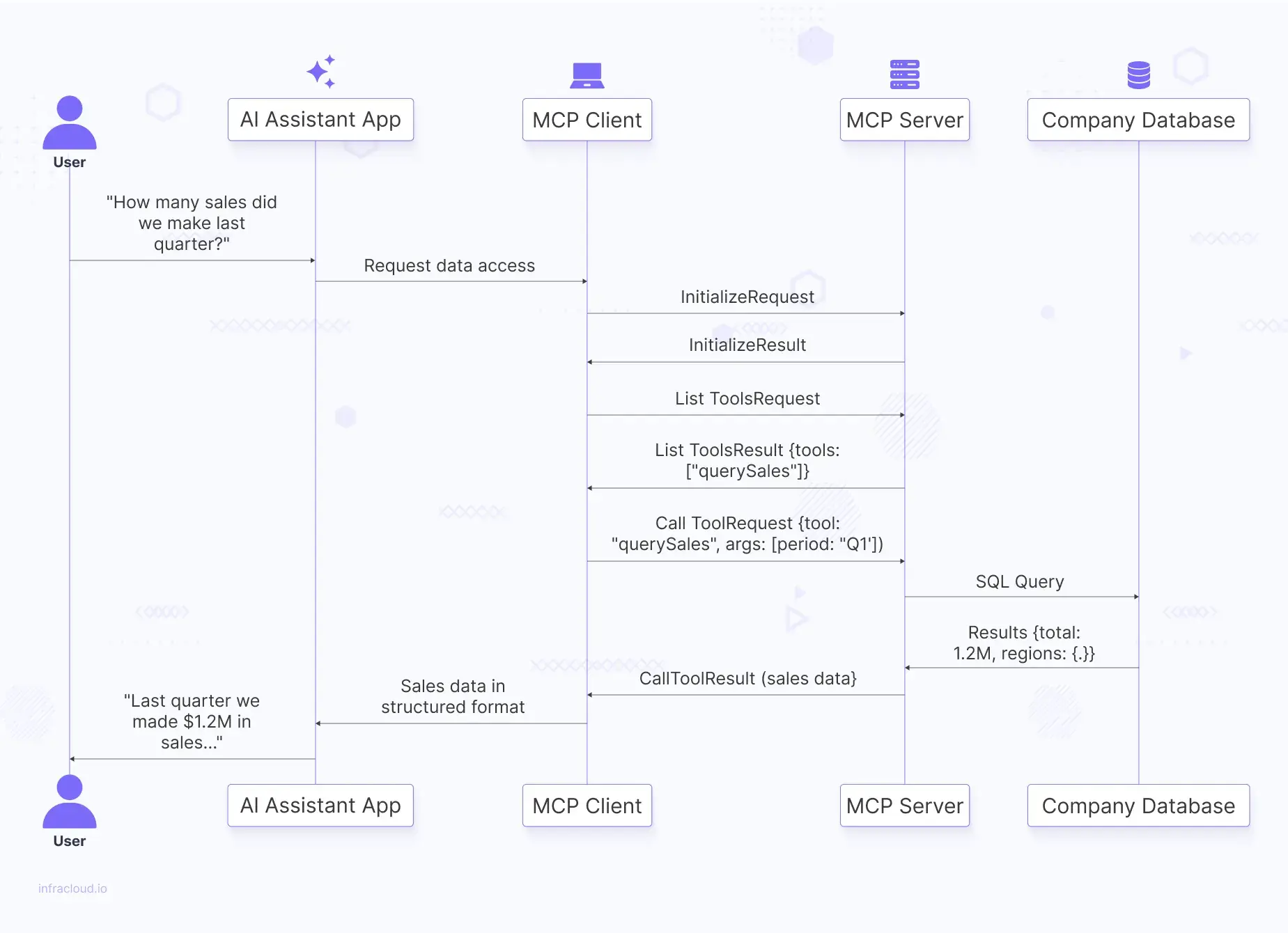

Suppose your organization has built an AI agent that helps you interact securely and efficiently with enterprise databases and sales data.

User Request

In this example, you ask your AI assistant app, “How many sales did we make last quarter?” by typing your query into the AI app.

Initialization

Behind the scenes, the AI assistant app uses an MCP client to connect to your company’s MCP server. The client sends an InitializeRequest message to establish the connection.

{

"jsonrpc": "2.0",

"id": 1,

"method": "initialize",

"params": {

"protocolVersion": "1.0"

}

}

The server acknowledges the request and responds with a list of capabilities it supports.

{

"jsonrpc": "2.0",

"id": 1,

"result": {

"protocolVersion": "1.0",

"capabilities": {

"tools": true

}

}

}

Tool Discovery

The client needs to know what the tools that are available with the MCP Server, so it sends a ListToolsRequest

{

"jsonrpc": "2.0",

"id": 2,

"method": "listTools",

"params": {}

}

The server responds with a query listing the tools available.

{

"jsonrpc": "2.0",

"id": 2,

"result": {

"tools": [

{

"name": "querySales",

"description": "Query sales data by period",

"parameters": {

"period": {

"type": "string",

"description": "Time period (e.g., Q1, Q2, 2023)"

}

}

}

]

}

}

Tool Execution

The AI assistant determines that quarterly sales data is needed and instructs the client to make the appropriate tool call.

{

"jsonrpc": "2.0",

"id": 3,

"method": "callTool",

"params": {

"toolName": "querySales",

"arguments": {

"period": "Q1"

}

}

}

Data Retrieval

The MCP server receives this request, validates it against security and access policies, and then queries the company database. The database returns the results, which the server formats and sends back to the client.

{

"jsonrpc": "2.0",

"id": 3,

"result": {

"totalSales": 1200000,

"regions": {

"East": 450000,

"West": 350000,

"North": 250000,

"South": 150000

},

"topProducts": [

{

"name": "Enterprise Solution",

"revenue": 500000

},

{

"name": "Professional Services",

"revenue": 300000

}

]

}

}

Response Generation

The AI assistant receives this structured data through the MCP client and formulates a natural language response: “Last quarter, we made $1.2M in sales, with the Eastern region performing best at $450K. Our top-selling product was Enterprise Solution, accounting for $500K of our revenue.”

Below is the sequence diagram of the above process.

Getting started with MCP

Implementing MCP is straightforward and enables you to build scalable and flexible AI applications. Depending on your role within the team, there are different ways to get started with MCP.

- Server developers: If you’re predominantly working with server-side, the MCP documentation for server developers contains comprehensive server implementation guides. These guides cover everything from basic server setup to advanced topics like security, permission models, and handling complex resource types.

- Client developers: Client-side SDKs are available to client-side developers to simplify the integration process. The MCP client developer documentation includes client implementation patterns, authentication best practices, and example code for common integration scenarios.

- Claude Desktop users: If you’re using Claude Desktop and want to leverage existing MCP servers, you’ll find user guides for Claude Desktop Users explaining how to connect to servers, authorize access, and use the enhanced capabilities that MCP servers provide within the Claude interface.

In addition, there are dedicated SDKs available for Python, Java, Kotlin, and TypeScript, which have comprehensive documentation, quickstart guides, API references, and sample applications to show common integration patterns.

Summing it up

Model Context Protocol is truly the USB standard that was needed to address the integration challenges that have hindered AI development. MCP removes the friction and redundancy that previously characterized AI integration workflows by establishing a standardized approach to connecting LLMs with external data sources and tools.

Much like how HTTP standardized web communications or SQL standardized database interactions, MCP creates a common language for AI systems to interact with the world around them.

To learn more about the latest in AI, subscribe to our AI-Xplore webinars. We hold regular webinars and host experts in AI to share their knowledge. Do share your thoughts on this article and how you use AI, as well as any interesting use cases that you have with me. Connect with me on LinkedIn or Twitter.

Stay updated with latest in AI and Cloud Native tech

We hate 😖 spam as much as you do! You're in a safe company.

Only delivering solid AI & cloud native content.