About the webinar

Did you know that, by some estimates, 90% of ML models never make it into production? Even the few that do often face critical challenges such as slow prediction times and limited device memory. This happens because ML models are typically trained on high-performance GPUs but then deployed to comparatively low-resource hardware to reduce cloud computing costs. This is where model optimization comes in: it aims to make models lighter and faster without compromising on performance. By optimizing these models, we can reduce their size, improve inference speed, and ultimately make them scalable enough for wider adoption. In this AI webinar, we'll dive into practical strategies for optimizing ML models, including quantization, pruning, distillation, and KV cache compression.
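To make the size reduction concrete, here is a minimal sketch of one of these techniques, symmetric per-tensor int8 post-training quantization, in plain Python. It is an illustrative assumption and not necessarily the approach used in the webinar. The function names `quantize_int8` and `dequantize_int8` are hypothetical, and production toolkits typically quantize whole tensors (often per channel) rather than Python lists.

```python
# Minimal sketch: symmetric per-tensor int8 post-training quantization.
# Assumption: weights are a plain list of floats; real frameworks operate on
# tensors and store the int8 values in packed buffers.

def quantize_int8(weights):
    """Map float weights to int8 values in [-127, 127] plus one float scale."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q, scale):
    """Recover approximate float weights from int8 values and the scale."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 0.25, 1.0]
q, scale = quantize_int8(weights)
approx = dequantize_int8(q, scale)

# Storage: 4 bytes per fp32 weight vs 1 byte per int8 weight (+ 4 bytes for the scale).
fp32_bytes = 4 * len(weights)
int8_bytes = 1 * len(q) + 4
max_error = max(abs(a - b) for a, b in zip(weights, approx))
print(q, scale, fp32_bytes, int8_bytes, max_error)
```

Each weight shrinks from 4 bytes to 1, so large tensors end up roughly 4x smaller once the single scale value is amortized, while the rounding error per weight stays within half the scale.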

Once the model is optimized and deployed, it can be served through REST or gRPC APIs, making it accessible to applications and end users. In the webinar, we'll explore the backend infrastructure and components required, along with techniques for optimizing how models are built and served. You'll discover how to keep your ML models highly scalable and capable using parallelism and scaling strategies.

Starting with a pre-trained model from Hugging Face, we will walk you through the process of optimizing it for efficient inference and deploying it to a custom AI lab environment. Our AI experts will share the challenges of optimizing models for inference, different optimization techniques, and ways to improve the performance of ML models in production. We'll also cover making the deployed model accessible and scalable while balancing speed, accuracy, and resource efficiency, all in a live demo.

What to expect

- Model deployment: The end-to-end process of taking a pre-trained model from Hugging Face and deploying it to a production-ready environment.

- Model optimization: Practical ML optimization strategies, including quantization, pruning, distillation, and KV cache compression to significantly enhance model efficiency, reduce latency, and optimize resource usage.

- Model serving: Exposing ML models to apps and end users through standardized, secure REST and gRPC APIs, and tuning them for high efficiency using parallelism and scaling techniques.

- Common challenges: Actionable insights for overcoming real-world issues in ML deployment and serving, such as large model sizes, slow prediction times, and limited device memory, and for balancing model performance, resource limitations, and deployment requirements.

- LIVE demo: Hands-on demonstration where we’ll import a model from Hugging Face, apply optimization techniques, deploy it to our AI lab, and showcase its performance through live API calls, all while explaining each step and its impact.

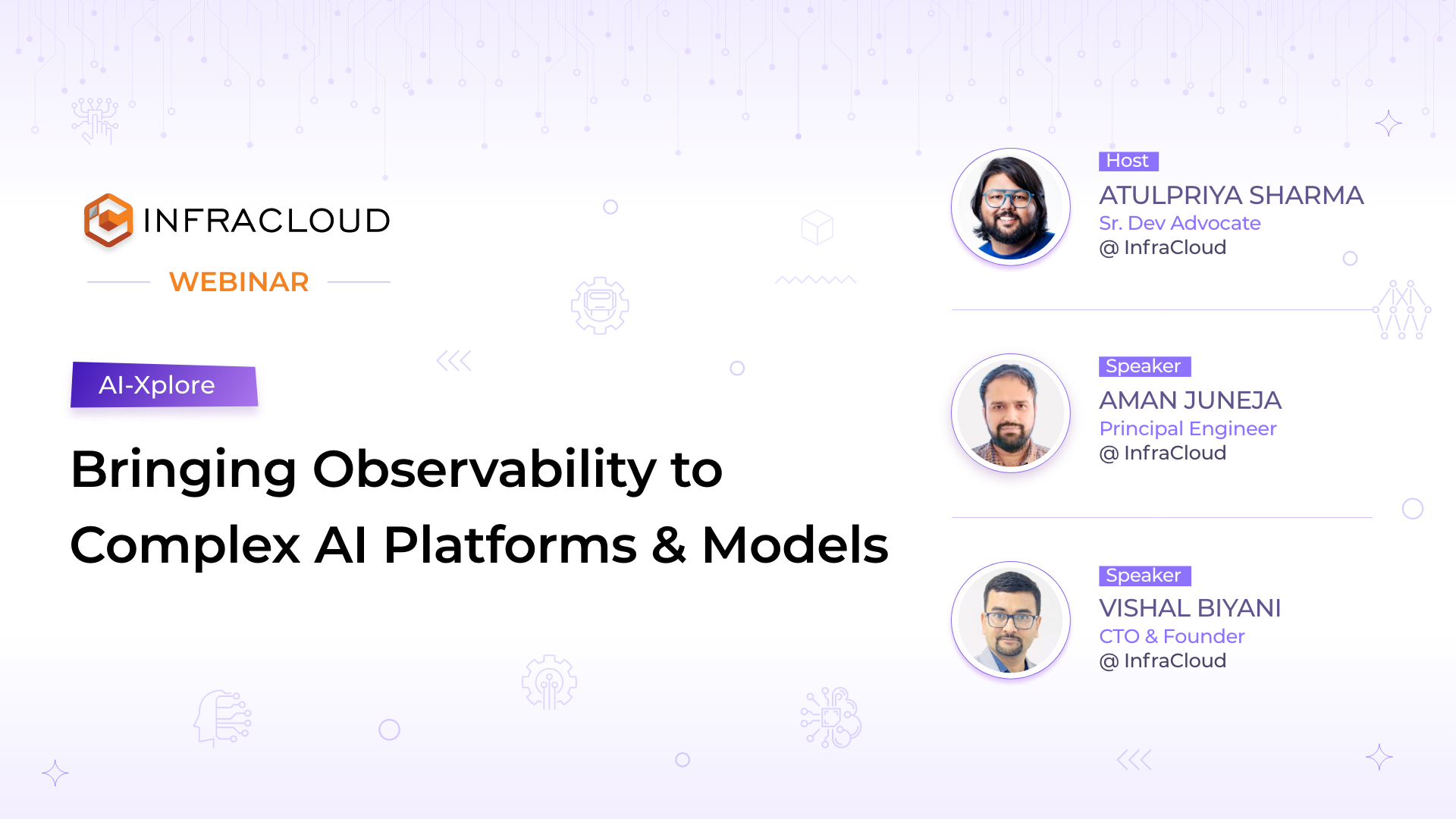

Meet the Speakers

Manual tester turned developer advocate. Atul talks about Cloud Native, Kubernetes, AI & MLOps to help other developers and organizations adopt cloud native. He is also a CNCF Ambassador and the organizer of CNCF Hyderabad.

Aman specializes in AI Cloud solutions and cloud native design, bringing extensive expertise in containerization, microservices, and serverless computing. His current focus lies in exploring AI Cloud technologies and developing AI applications using cloud native architectures.

Sanket Sudake specializes in AI Cloud initiatives and building cloud native platforms. He is a maintainer of the Fission serverless platform, with deep expertise in distributed systems, containers, and cloud environments.

Need a clear starting point to build your own AI lab?

Leverage our AI stack charts to empower your team with faster, more efficient AI service deployment on Kubernetes.